$500 Billion Is Just the Start: NVIDIA’s Bold Bet, Amazon’s Silicon Siege, and Marvell’s Photon Gamble

Welcome, AI & Semiconductor Investors,

Is NVIDIA’s massive $500 billion AI runway actually underselling its potential? CFO Colette Kress casually dropped a bombshell: a potential mega-deal with OpenAI isn’t even included in that figure. Amazon, meanwhile, revealed it has quietly built Trainium into a multi-billion-dollar business. And Marvell just placed a bet worth up to $5.5 billion that the future of AI connectivity depends on switching from copper to photonics. Let’s Chip In.

What The Chip Happened?

🔥 NVIDIA’s CFO Spills the Tea: $500 Billion Is Just the Warm-Up

⚡ AWS Flexes Its Chip Muscle: Trainium Goes Multi-Billion, AI Factories Come Home

🔮 Marvell Goes All-In on Light: $5.5B Bet on Photonics Signals AI’s Next Infrastructure Wave

Read time: 7 minutes

Join WhatTheChippHappened Community — 33% OFF Annual Use Code CYBER

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

Nvidia (NASDAQ: NVDA)

🔥 NVIDIA’s CFO Spills the Tea: $500 Billion Is Just the Warm-Up

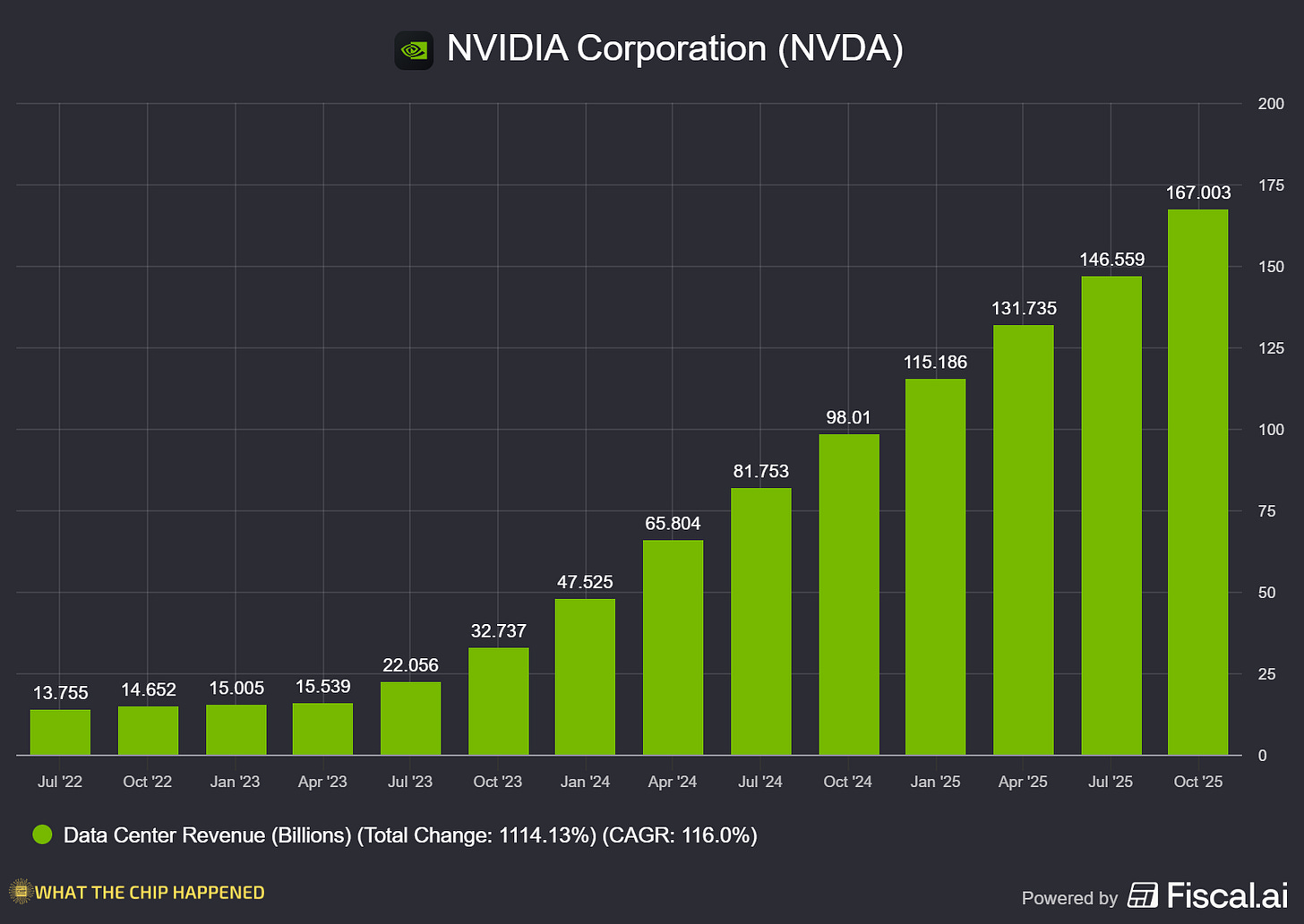

What The Chip: At the UBS Global Technology Conference on December 2nd, 2025, NVIDIA CFO Colette Kress sat down for a fireside chat that turned into a masterclass in managing investor expectations, firmly rejecting the “AI bubble” narrative while revealing that the company’s massive $500 billion revenue visibility through 2026 doesn’t even include a potential blockbuster direct deal with OpenAI.

Details:

💰 The $500 Billion Floor, Not Ceiling: Kress confirmed that NVIDIA’s cumulative $500 billion Blackwell and Vera Rubin visibility through 2026 represents only what’s coming through cloud service providers. The analyst suggested this translates to roughly $350-400 billion in calendar 2026 revenue, and Kress didn’t push back. More importantly, she revealed: “Right now, that $500 billion does not include any of the work that we’re doing right now on the next part of the agreement with OpenAI.” That’s a potentially massive deal sitting on the sidelines.

📦 Inventory Surge Signals Locked-In Demand: One standout data point: NVIDIA’s combined inventory and purchase commitments jumped $25 billion in a single quarter, up from the usual ~$2 billion quarterly increase. Kress indicated most of that inventory has already shipped to customers as of early December, hard evidence of near-term revenue visibility rather than speculative stockpiling.

⚠️ The OpenAI Elephant in the Room: The analyst pressed hard on the 10-gigawatt OpenAI letter of intent, which UBS estimates could be worth $400 billion over the life of the deal. Kress acknowledged: “Yes, we still have not completed a definitive agreement, but we’re working with them.” OpenAI wants to buy direct rather than through hyperscalers, a major strategic shift, but until ink hits paper this remains potential upside, not a certainty.

🛡️ CUDA Moat Gets Quantified: Kress revealed that software alone contributes 2X of Blackwell’s 10-15X performance improvement, a rare quantification of NVIDIA’s software advantage. CUDA is now on its 13th version with backward and forward compatibility. She was emphatic: “Today, everybody is on our platform. All models are on our platform, both in the cloud as well as on-premise.”

📉 Gross Margins Holding Steady: Despite concerns about HBM cost pressures and rising bill of materials, Kress committed to maintaining mid-70s gross margins into next year. She cited improvements in cycle times, yields, and manufacturing costs, noting that even “one more day of efficiency” at NVIDIA’s scale moves the needle significantly.

🔮 Vera Rubin Has Taped Out: The next-generation chip is no longer vaporware. Kress confirmed: “Vera Rubin, we’re pleased to say that it has been taped out. We have the chips and are working feverishly right now to get ready for the second half of next year.” She expects another “X factor increase in performance.”

🚩 Model Builder Financing Concerns Linger: When pressed about model builders with limited revenue committing massive capacity, Kress acknowledged reality: “They’re gonna have to work through, have I earned enough in terms of profitability? Can I raise more capital?” She emphasized NVIDIA vets customers for purchase orders and ability to pay, but this doesn’t eliminate the risk that startup funding could dry up.

🌍 Middle East as Growth Vector: Kress hinted at sovereign AI infrastructure deals as upside to guidance, mentioning Middle East opportunities multiple times and teasing: “You may even hear about another one today as well.”
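
To put the headline numbers above side by side, here is a minimal Python sketch of the arithmetic. Every input is a figure quoted in this recap; the function and constant names are hypothetical labels chosen for illustration, and the calendar-2026 range is the analyst's rough estimate, not NVIDIA guidance.

```python
# Figures quoted in the recap above, in billions of dollars.
CUMULATIVE_VISIBILITY = 500.0   # Blackwell + Vera Rubin visibility through 2026
CY2026_RANGE = (350.0, 400.0)   # analyst's rough calendar-2026 revenue estimate
COMMITMENT_JUMP = 25.0          # quarterly rise in inventory + purchase commitments
TYPICAL_JUMP = 2.0              # usual quarterly rise (approximate)


def share_of_visibility(revenue: float, visibility: float = CUMULATIVE_VISIBILITY) -> float:
    """Return revenue as a fraction of the cumulative visibility figure."""
    return revenue / visibility


def jump_multiple(jump: float = COMMITMENT_JUMP, typical: float = TYPICAL_JUMP) -> float:
    """Return how many times larger this quarter's increase was than a typical one."""
    return jump / typical


if __name__ == "__main__":
    low, high = (share_of_visibility(r) for r in CY2026_RANGE)
    print(f"CY2026 share of $500B visibility: {low:.0%} to {high:.0%}")
    print(f"Commitment jump vs. typical quarter: {jump_multiple():.1f}x")
```

On these inputs, the analyst's range puts roughly 70% to 80% of the cumulative figure in calendar 2026, and the commitment increase is about 12.5 times a typical quarter's.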

Why AI/Semiconductor Investors Should Care: This conversation revealed NVIDIA operating from a position of extraordinary strength: $500 billion in visible demand, supply chain commitments suggesting management has locked in hypergrowth through mid-2026, and competitive positioning that Kress described in near-monopolistic terms.

The unsigned OpenAI LOI represents both the biggest upside catalyst and the most significant execution risk. If that deal closes as a direct relationship, NVIDIA’s trajectory steepens further, but its absence from current guidance means the stock’s valuation already relies heavily on confirmed hyperscaler demand. For investors, the $25 billion inventory surge is the green flag that matters most: it’s not guidance or vision, it’s committed capital translating to imminent revenue.

Amazon (NASDAQ: AMZN)

⚡ AWS Flexes Its Chip Muscle: Trainium Goes Multi-Billion, AI Factories Come Home

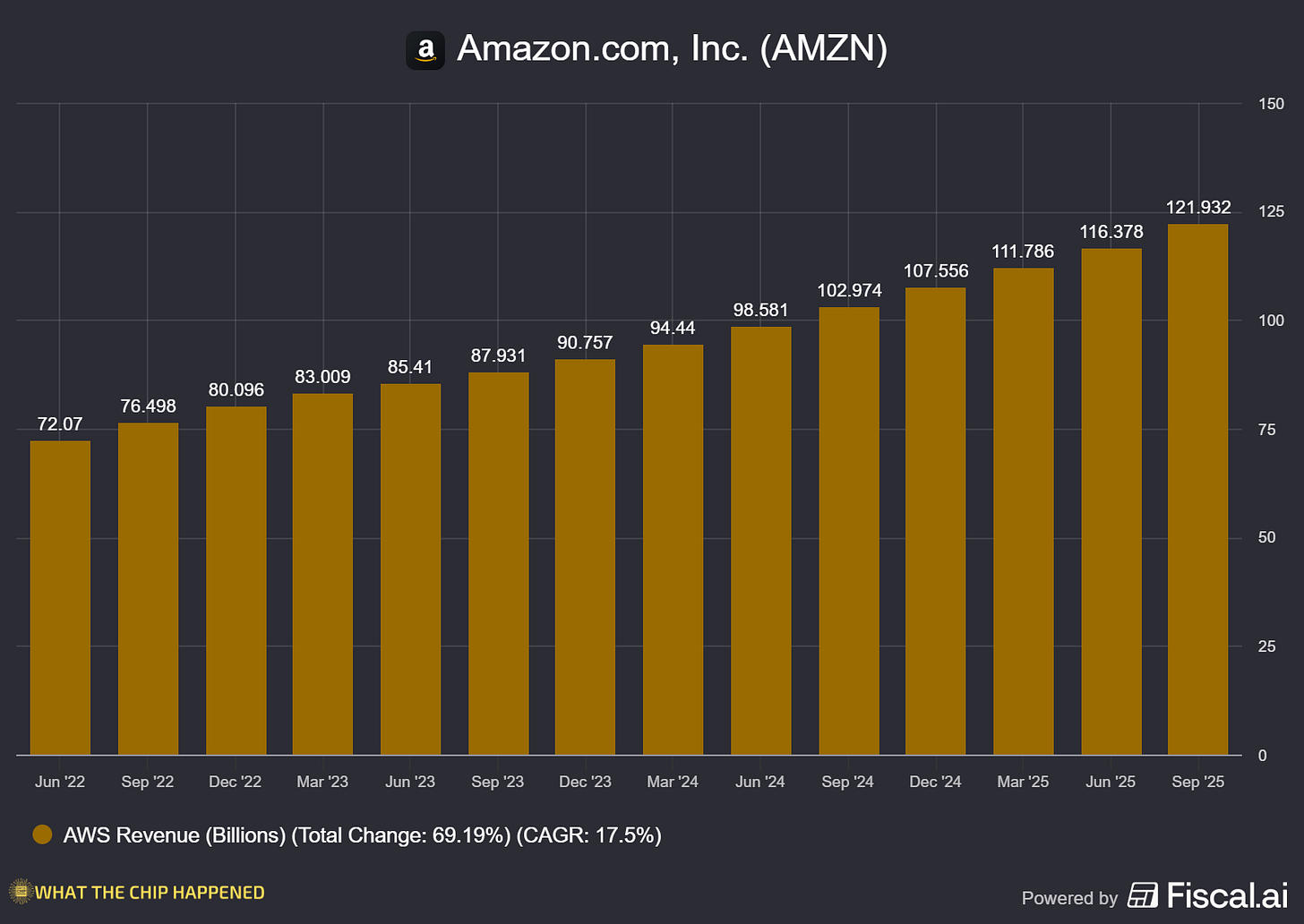

What The Chip: At AWS re:Invent 2025 on December 2nd, CEO Matt Garman unveiled a sweeping set of announcements that position Amazon as a vertically integrated AI powerhouse, from custom silicon to quantum computing to enterprise-grade AI agents, while revealing that its homegrown Trainium chips have quietly become a multi-billion dollar business powering the majority of Amazon Bedrock inference.

Details:

💰 AWS hit $132 billion in revenue, with growth accelerating to 20% year-over-year. Garman put the scale in perspective: “The amount we grew in the last year alone was about $22 billion. That absolute growth over the last 12 months is larger than the annual revenue of more than half of the Fortune 500.” The cloud giant added 3.8 gigawatts of data center capacity in 2024, more than any competitor globally.

🔥 Trainium is no longer a side project; it’s a juggernaut. AWS deployed over 1 million Trainium chips and ramped Trainium 2 volumes 4x faster than any previous chip in AWS history. Garman didn’t mince words: “We’re selling those as fast as we can make them. Trainium already represents a multi-billion dollar business today.” Even more striking: the majority of Amazon Bedrock inference now runs on Trainium. “If you’re using any of Claude’s latest generation models in Bedrock, all of that traffic is running on Trainium, which is delivering the best end-to-end response times compared to any other major provider.”

⚡ Trainium 3 UltraServers hit general availability with AWS’s first 3-nanometer AI chip. The specs are staggering: 4.4x more compute, 3.9x memory bandwidth, and 5x more AI tokens per megawatt versus Trainium 2. The largest configurations pack 144 Trainium 3 chips delivering 362 FP8 petaflops of compute with 700+ terabytes per second of aggregate bandwidth. AWS can scale these clusters to hundreds of thousands of chips.

🚀 AWS previewed Trainium 4, already in active development. Projections show 6x FP4 compute performance, 4x memory bandwidth, and 2x high-bandwidth memory capacity versus Trainium 3, designed to handle the largest models in existence.

🏭 AWS AI Factories bring AWS infrastructure into customer data centers. Think of it as a private AWS region: customers leverage their own real estate and power while getting access to Trainium 3 UltraServers, NVIDIA GPUs, SageMaker, and Bedrock. This directly addresses enterprises with existing data center investments who want AWS’s AI stack without migrating workloads.

🟢 NVIDIA remains a key partner. AWS announced P6e GB300 instances powered by NVIDIA’s GB300 NVL72 systems, delivering over 20x the compute compared to previous-generation P5en instances. AWS continues positioning itself as a multi-vendor shop rather than going all-in on Trainium alone. Trainium 4 will use NVIDIA’s NVLink Fusion technology.

🤖 Nova 2 models arrived with competitive positioning against frontier models. Nova 2 Pro targets agentic workloads, matching or beating GPT-5.1, Gemini 3 Pro, and Claude 4.5 Sonnet on instruction-following and tool-use benchmarks according to Artificial Analysis. Nova 2 Omni breaks new ground as the industry’s first reasoning model supporting text, image, video, and audio input with text and image output.

⚛️ AWS unveiled Ocelot, its first quantum computing chip prototype, claiming it reduces quantum error correction costs by over 90%, a potential breakthrough for making quantum computing commercially viable.

🔵 Intel and AMD get new instances too. C8a instances (AMD EPYC) deliver 30% higher performance, while C8ine instances (Intel Xeon 6) offer 2.5x higher packet performance per vCPU. For the third consecutive year, over half of new CPU capacity came from AWS’s own Graviton processors, signaling continued in-house silicon momentum.
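
Two of the figures above can be cross-checked with simple arithmetic. The Python sketch below is illustrative only: it assumes the $132 billion and $22 billion figures are measured on the same basis, and the function names are hypothetical.

```python
# Figures quoted in the recap above.
AWS_REVENUE_B = 132.0        # AWS revenue, $ billions
AWS_ABS_GROWTH_B = 22.0      # absolute growth over the last 12 months, $ billions
ULTRASERVER_CHIPS = 144      # Trainium 3 chips in the largest configuration
ULTRASERVER_PFLOPS = 362.0   # FP8 petaflops for that configuration


def implied_growth_rate(current: float, absolute_growth: float) -> float:
    """Growth rate implied by a current level and the absolute increase that led to it."""
    prior = current - absolute_growth
    return absolute_growth / prior


def per_chip_pflops(total_pflops: float, chips: int) -> float:
    """Average FP8 petaflops per chip across a configuration."""
    return total_pflops / chips


if __name__ == "__main__":
    rate = implied_growth_rate(AWS_REVENUE_B, AWS_ABS_GROWTH_B)
    print(f"Implied AWS growth: {rate:.0%}")
    chip = per_chip_pflops(ULTRASERVER_PFLOPS, ULTRASERVER_CHIPS)
    print(f"Implied FP8 petaflops per Trainium 3 chip: {chip:.2f}")
```

The implied growth rate works out to 20%, matching the stated figure, and the largest configuration averages roughly 2.5 FP8 petaflops per chip.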

Why AI/Semiconductor Investors Should Care: The Trainium deployment numbers (1 million chips, a 4x faster ramp than any prior chip, and the majority of Bedrock inference) suggest AWS has cracked both the manufacturing scale and software optimization challenges that plague custom silicon efforts. For NVIDIA investors, the P6e GB300 announcement confirms AWS remains a massive customer, but the explicit Trainium-vs-GPU competitive positioning and the “tokens per megawatt” efficiency metrics signal AWS is building optionality to reduce GPU dependence over time. It is crucial to note, however, that Trainium 4 will use NVIDIA’s NVLink Fusion technology.

Garman’s framing of AI agents as the inflection point where “technical wonder” becomes “real value” echoes a broader industry concern: enterprises have spent heavily on AI infrastructure but haven’t seen proportional returns. If agentic AI delivers on that promise, the 3.8 GW of capacity AWS added becomes revenue-generating infrastructure. If it doesn’t, hyperscaler spending becomes a harder sell to shareholders.

Marvell (NASDAQ: MRVL)

🔮 Marvell Goes All-In on Light: $5.5B Bet on Photonics Signals AI’s Next Infrastructure Wave

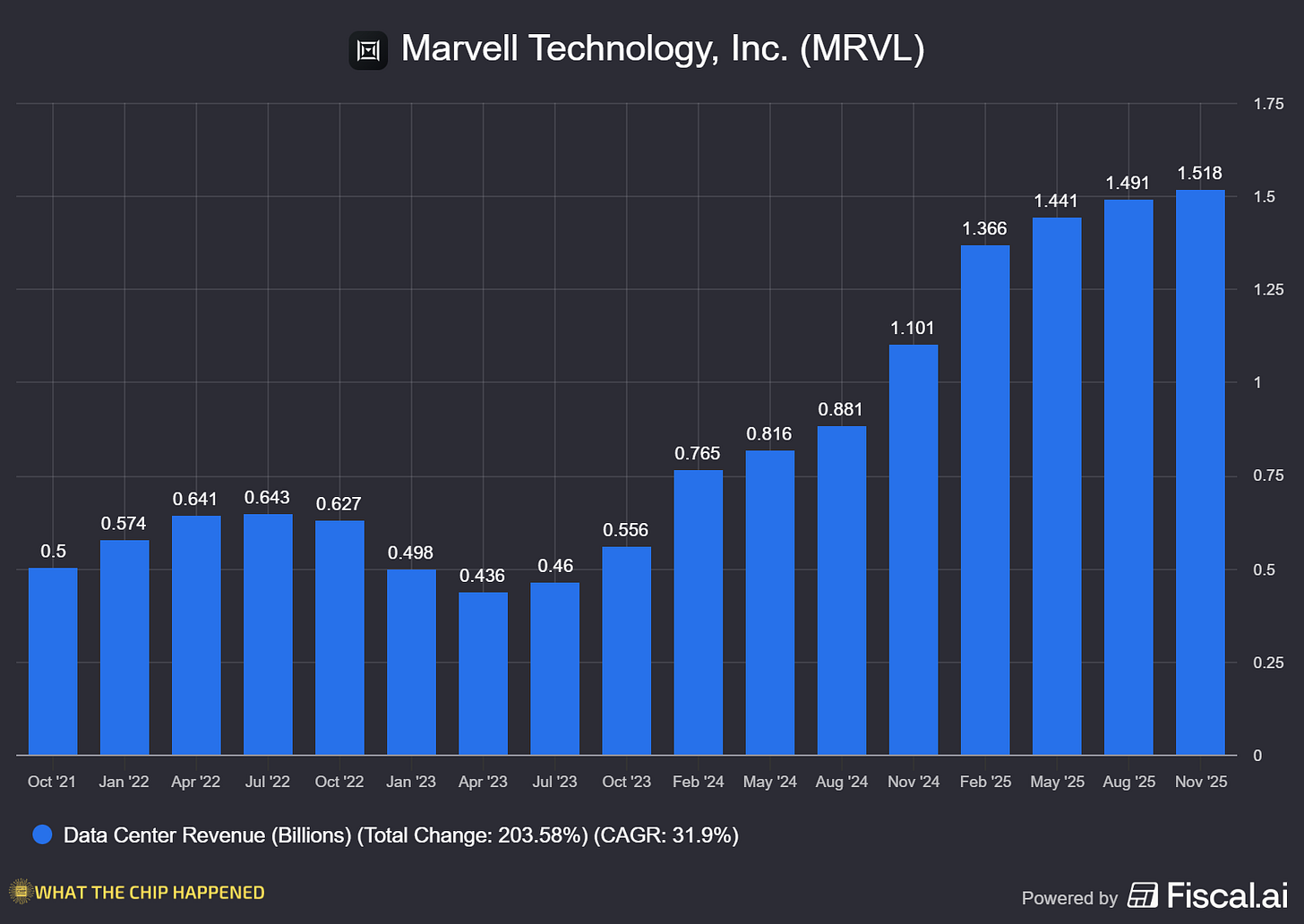

What The Chip: Marvell announced Q3 FY2026 earnings on December 2, 2025, beating expectations with $2.075 billion in revenue (up 37% YoY) while simultaneously revealing a transformational $3.25 billion acquisition of Celestial AI—a photonics startup whose optical interconnect technology has already landed a major hyperscaler design win that Marvell believes will reshape how AI data centers are built.

Details:

💡 The Celestial AI deal is structured as $3.25 billion upfront plus up to $2.25 billion in contingent payments tied to revenue milestones through FY29. For context, this mirrors Marvell’s 2021 Inphi acquisition, which CEO Matt Murphy called “an absolute home run.” Celestial’s photonic fabric technology delivers greater than 2x power efficiency versus copper interconnects with nanosecond-class latency, critical as AI clusters scale and electrical connections hit physical limits. Revenue contributions begin in the second half of FY28, targeting a $500 million annualized run rate by Q4 FY28 and $1 billion by Q4 FY29.

📈 Management significantly raised their outlook. FY27 data center revenue growth expectations jumped to over 25% YoY (previously lower), driven by cloud CapEx growth now expected to exceed 30%. FY28 looks even stronger: data center revenue projected to accelerate to approximately 40% growth, with total company growth around 30%. Murphy explicitly stated these are “base case assumptions, not dream the dream.”

🔌 The interconnect business (50% of data center revenue) is firing on all cylinders. 1.6T transceivers began shipping in H2 FY26 with “exceptionally strong demand.” AEC and retimer revenue is expected to more than double from FY26 to FY27. The company demonstrated 400G-per-lane technology for 3.2T transceivers at OFC, with production deployments expected in calendar 2028. Marvell claims it is consistently “first to market, first to ramp” in optical categories.

🎯 The XPU attach market is emerging as a sleeper growth driver. Marvell has secured over 15 design wins with “line of sight to revenue exceeding $2 billion by fiscal 2029.” Five unique CXL sockets are secured across two Tier 1 U.S. hyperscalers, with the first already entering volume production. Murphy noted attach rates are “exceeding our initial expectations” as hyperscalers plan to deploy custom NICs across AI server fleets that “can exceed 1 million units or more annually” at large customers.

⚠️ The custom business (25% of data center) carries concentration risk. Management acknowledged it’s tied to “a handful of programs today” with a product transition occurring at the lead customer. The second major XPU customer won’t contribute meaningfully until FY28, and Murphy noted this customer “doesn’t have a history of ramping big ASIC programs.” Growth guidance of “at least 20%” reflects this caution, with Q3 showing a sequential decline “due to lumpiness in demand.”

📊 Q3 financials were solid: non-GAAP EPS of $0.76 (beat by $0.02), non-GAAP gross margin of 59.7%, and operating cash flow of $582 million. The company executed $1 billion in accelerated stock repurchases plus $300 million in ongoing buybacks. Q4 guidance calls for $2.2 billion in revenue (up 21% YoY as reported, 24% excluding the divested automotive business).
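
The deal math and guidance above reduce to a few lines of arithmetic. This Python sketch uses only figures quoted in this recap; the helper names are hypothetical, and the prior-year revenue is backed out from the stated growth rate rather than taken from a filing.

```python
# Figures quoted in the recap above, in billions of dollars unless noted.
UPFRONT_B = 3.25               # Celestial AI upfront consideration
CONTINGENT_MAX_B = 2.25        # maximum contingent (earnout) payments
Q4_GUIDE_B = 2.2               # Q4 revenue guidance
Q4_GROWTH_REPORTED = 0.21      # stated YoY growth, as reported
RUN_RATE_TARGETS_B = {"Q4 FY28": 0.5, "Q4 FY29": 1.0}  # annualized run-rate targets


def max_deal_value(upfront: float, contingent: float) -> float:
    """Total consideration if every earnout milestone is met."""
    return upfront + contingent


def implied_prior_revenue(current: float, growth: float) -> float:
    """Back out the year-ago figure from a current value and its stated growth rate."""
    return current / (1.0 + growth)


def quarterly_from_run_rate(annualized: float) -> float:
    """Quarterly revenue implied by an annualized run rate."""
    return annualized / 4.0


if __name__ == "__main__":
    total = max_deal_value(UPFRONT_B, CONTINGENT_MAX_B)
    print(f"Maximum deal value: ${total:.2f}B ({CONTINGENT_MAX_B / total:.0%} contingent)")
    prior = implied_prior_revenue(Q4_GUIDE_B, Q4_GROWTH_REPORTED)
    print(f"Implied year-ago Q4 revenue: ${prior:.2f}B")
    for quarter, run_rate in RUN_RATE_TARGETS_B.items():
        print(f"{quarter}: ${quarterly_from_run_rate(run_rate) * 1000:.0f}M per quarter")
```

This is where the $5.5 billion headline figure comes from: $3.25 billion upfront plus up to $2.25 billion contingent, with about 41% of the maximum tied to milestones. The run-rate targets translate to $125 million and $250 million of quarterly Celestial revenue.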

Why AI/Semiconductor Investors Should Care: Marvell is positioning itself as the “one-stop shop” for everything inside the AI rack (interconnects, switches, custom XPUs, XPU attach solutions, and storage) rather than competing on individual component specs. This rack-level strategy creates meaningful switching costs and deeper customer relationships, which explains why hyperscalers are providing multi-year visibility that enables Marvell to guide two full years out with unusual confidence.

The Celestial AI acquisition bets on copper-to-optics transition as AI clusters scale beyond electrical interconnects’ physical limits, targeting an estimated $10+ billion addressable market by 2030. The risk? Celestial represents an unproven commercial deployment at scale, and the aggressive earnout signals both opportunity and execution pressure. As Murphy put it: “We are everywhere in the AI rack. And we are just getting started.”

Youtube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.
