AMD's $1T Quest, Nebius' Mega Meta Win & CoreWeave's $55B Backlog Bonanza

Welcome, AI & Semiconductor Investors,

Could AMD realistically capture a slice of a trillion-dollar market? CEO Lisa Su unveiled a daring roadmap targeting over $100 billion in annual data-center revenue within five years, powered by AI mega-deals such as OpenAI’s multi-gigawatt commitment and Oracle’s 50,000-GPU deployment. Meanwhile, Nebius’ Meta and Microsoft contracts spotlight a critical truth: AI infrastructure is sold out and capacity-constrained, pushing hyperscalers into multi-billion-dollar commitments. And CoreWeave? With a staggering $55.6 billion backlog, it’s doubling down on infrastructure despite short-term snags, signaling sustained, explosive demand ahead. — Let’s Chip In.

What The Chip Happened?

🔥 AMD: Helios, OpenAI & a $1T TAM — the growth math gets real

🧠 “Sold‑Out Cloud” energy: Meta + Microsoft deals power a 2026 sprint

🌐 From Backlog to Backstop: Q3 demand soars, one data‑center snag nudges 2025 into ’26

Read time: 7 minutes

NEW SEMICONDUCTOR COMMUNITY — 50% OFF FOUNDING RATE

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

AMD (NASDAQ: AMD)

🔥 AMD: Helios, OpenAI & a $1T TAM — the growth math gets real

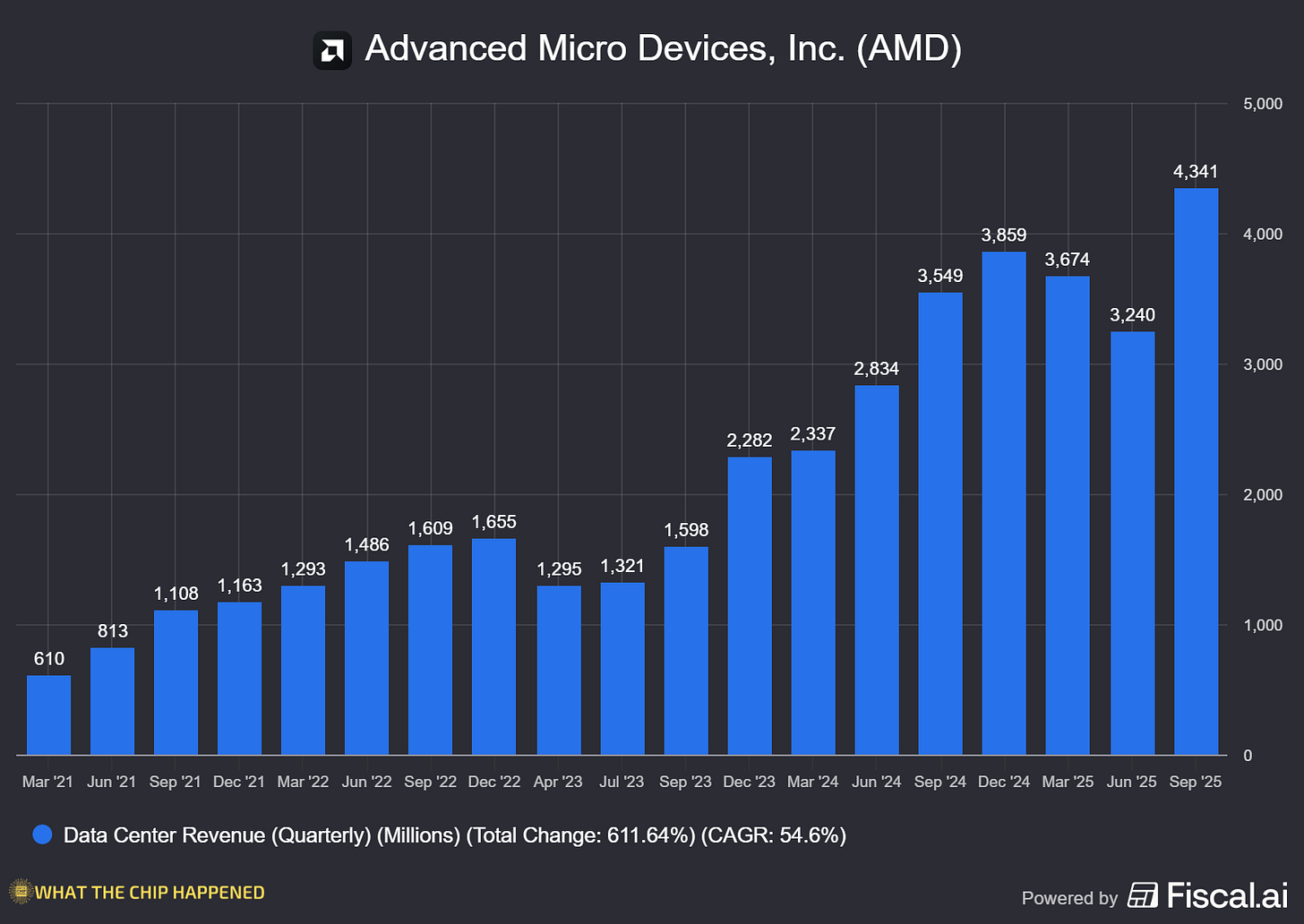

What The Chip: On November 11, 2025, AMD laid out a bold Analyst Day plan: >35% company‑wide revenue CAGR over the next 3–5 years, non‑GAAP EPS above $20, and a sprint to AI data‑center scale anchored by MI450‑based “Helios” racks in Q3’26 and MI500 in 2027. Management framed the prize as a $1T compute market by 2030, with a line of sight to $100B+ in annual data‑center revenue within five years.

Details:

📊 “Money slides” that matter. AMD targets >35% revenue CAGR, non‑GAAP operating margin >35%, and non‑GAAP EPS >$20 over 3–5 years. By segment, it targets >60% CAGR for data center and >80% for data‑center AI. AMD also shared a 55–58% gross‑margin band and a >25% FCF margin for the model. Quote: “AMD is entering a new era of growth… We have never been better positioned.” — CEO Lisa Su.
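The CAGR target is easier to feel as a multiple. Here is a minimal Python sketch of the compounding math, revenue multiple = (1 + CAGR)^years, evaluated at exactly 35% (the floor of AMD’s “>35%” target). The function name is ours, and no revenue baseline is assumed, since none is given here.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Revenue multiple after compounding `cagr` (e.g. 0.35 for 35%) for `years` years."""
    return (1.0 + cagr) ** years


# 35% is the floor of the ">35%" target, used as an illustration, not a forecast.
for years in (3, 4, 5):
    print(f"{years} years at 35% CAGR: {growth_multiple(0.35, years):.2f}x")
```

Read it as: even at the floor, revenue roughly 2.5× in three years and about 4.5× in five.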

🖥️ $1T TAM, $100B run‑rate ambition. Su put data‑center compute on a path to $1T by 2030 and said AMD can scale data‑center revenue to ~$100B annually within five years—underscoring how AI training/inference is driving a secular capex wave.

🤝 Mega‑deals de‑risk the ramp.

OpenAI: multi‑year, multi‑generation pact to deploy 6 gigawatts of AMD GPU capacity, starting with 1 GW in 2H’26 on MI450.

Oracle: 50,000 MI450 GPUs begin rolling into OCI in Q3’26, expanding in 2027 and beyond. Quote: “Together, AMD and Oracle are accelerating AI with open, optimized, and secure systems built for massive AI data centers.” — Forrest Norrod (EVP, AMD).

U.S. Dept. of Energy (ORNL): two AMD‑accelerated AI supercomputers (Lux in early 2026; Discovery later) extend sovereign/scientific AI momentum.

🧱 Helios + MI450 (Q3’26) → MI500 (’27): rack‑scale, memory‑heavy AI. Helios is AMD’s open, rack‑scale platform: 72 GPUs per rack, 432GB HBM4 per GPU, and up to 20TB/s memory bandwidth per accelerator, so larger models fit in memory with less sharding across GPUs. AMD says Helios delivers rack‑level performance leadership, and the roadmap steps up again with MI500 in 2027.
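The Helios per‑GPU specs add up fast at rack scale. A back‑of‑envelope Python sketch using only the figures above (72 GPUs, 432GB HBM4, up to 20TB/s each) gives rack‑level capacity and peak bandwidth, plus a hypothetical lower bound on how many GPUs a model’s weights alone would occupy. The 2‑bytes‑per‑parameter (BF16) assumption, the 405B‑parameter example, and all names are ours; the bound ignores KV cache, activations, and runtime overhead.

```python
import math

# Per-GPU figures as quoted for MI450 in a Helios rack.
GPUS_PER_RACK = 72
HBM4_GB_PER_GPU = 432
BANDWIDTH_TBPS_PER_GPU = 20  # an "up to" figure, so treat as a peak


def rack_hbm_tb() -> float:
    """Total HBM4 capacity per rack in TB (decimal units: 1 TB = 1000 GB)."""
    return GPUS_PER_RACK * HBM4_GB_PER_GPU / 1000


def rack_bandwidth_pbps() -> float:
    """Aggregate peak memory bandwidth per rack in PB/s."""
    return GPUS_PER_RACK * BANDWIDTH_TBPS_PER_GPU / 1000


def min_gpus_for_weights(params_billion: float, bytes_per_param: float = 2.0) -> int:
    """Hypothetical lower bound on GPUs needed just to hold model weights.

    Ignores KV cache, activations, optimizer state, and runtime overhead,
    all of which push the real number higher.
    """
    weights_gb = params_billion * bytes_per_param  # 1B params at 2 bytes = 2 GB
    return math.ceil(weights_gb / HBM4_GB_PER_GPU)


print(f"HBM4 per rack: {rack_hbm_tb():.1f} TB")  # 31.1 TB
print(f"Peak bandwidth per rack: {rack_bandwidth_pbps():.2f} PB/s")  # 1.44 PB/s
print(min_gpus_for_weights(405))  # 810 GB of BF16 weights -> 2 GPUs
```

That’s the “less sharding” argument in miniature: the more HBM per GPU, the fewer devices a given model has to be split across.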

🧠 CPU cadence & process lead‑ins. The next‑gen EPYC “Venice” CPU, built on TSMC N2 (2nm), is on the Helios bill of materials, lining up with MI400‑class deployments in 2026. (Why it matters: more cores/threads per socket and better perf/W reduce the total server count needed per job.)

💻 AI PC scale‑out. Ryzen’s AI PC portfolio has grown 2.5× since 2024 and now sits in 250+ platforms, with adoption at over half of the Fortune 100. AMD guides a ~10× improvement in on‑device AI performance by 2027 with “Gorgon” and “Medusa.” (Translation: local copilots get far faster and cheaper to run.)

🧰 Open software flywheel. ROCm downloads surged ~10× YoY, and AMD keeps adding features/perf across releases. The open stack (ROCm + UALink + Ultra Ethernet‑aligned fabric) is a practical way to lower switching costs from CUDA‑first estates.

⚠️ What could go wrong (and what to watch):

Supply/complexity risk: Helios depends on HBM4, liquid cooling, and 800G‑class fabrics at scale; execution, yields, and serviceability will matter.

Policy & China exposure: 2025 export rules hurt shipments/margins; AMD’s Q4’25 outlook embeds ~54.5% non‑GAAP GM as a near‑term reality versus the long‑term 55–58% target.

Competition: NVIDIA’s next racks (Vera Rubin/OBERON‑class) set a high bar; AMD’s open approach must translate to time‑to‑train, memory fit, and TCO wins in customer POCs.

Why AI/Semiconductor Investors Should Care

This isn’t just “another node + another GPU.” The 6‑GW OpenAI commitment, 50k‑GPU Oracle cluster, and sovereign AI wins give AMD multi‑year shipment visibility and prove customers will buy an open, memory‑rich, rack‑scale alternative when it hits real‑world perf/TCO. If AMD executes Helios + MI450 on time and ROCm keeps compilers/kernels in sync, the model supports margin expansion toward 55–58% GM and a credible path to double‑digit AI accelerator share—with $100B data‑center revenue the north star. Just remember the timing: the heavy lift starts 2H’26, so near‑term numbers still ride MI350 and EPYC share gains while policy/supply remain watch items.

Nebius Group (NASDAQ: NBIS)

🧠 “Sold‑Out Cloud” energy: Meta + Microsoft deals power a 2026 sprint

What The Chip: On Nov 11, 2025, Nebius said it inked a $3B / 5‑year AI‑infrastructure deal with Meta, on the heels of its $17.4–$19.4B Microsoft contract. Management stressed that demand was “overwhelming” and the Meta deal size was capped by available capacity, not appetite.

Details:

🤝 Mega‑deals as accelerants, not the end game. Meta’s $3B and Microsoft’s $17.4–$19.4B agreements validate Nebius with tier‑1 buyers and act as financing flywheels for the core AI cloud. As the shareholder letter puts it, these partnerships are “commercial and financial accelerators” for the cloud business. Quote (CEO Arkady Volozh): demand for Meta capacity was “overwhelming… limited to the amount of capacity that we had available.”

⚡ Capacity is the constraint—and the unlock. Nebius lifted its contracted power target from 1 GW to >2.5 GW by YE26, with 800 MW–1 GW connected by then (220 MW connected by YE25). That’s the gating item for revenue in 2025–26.

📈 2026 revenue runway got longer. Management now targets $7–$9B ARR by YE26 (ARR = annualized run‑rate revenue), up from $551M ARR exiting September. Capacity remained sold out in Q3.
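How big is the $7–$9B ARR ask? A quick Python sketch: one function annualizes a month of revenue (the common “latest month × 12” ARR convention, which we are assuming here; Nebius’ exact definition isn’t given), and the other computes the multiple from the $551M September exit rate to the YE26 target range. Function names are hypothetical.

```python
def arr_from_monthly(monthly_revenue_musd: float) -> float:
    """Annualize one month of revenue (assumed 'latest month x 12' ARR convention)."""
    return monthly_revenue_musd * 12


def required_multiple(start_arr: float, target_arr: float) -> float:
    """How many times larger the target ARR is than the starting ARR."""
    return target_arr / start_arr


START_ARR_MUSD = 551  # ARR exiting September 2025, $M
for target_musd in (7_000, 9_000):  # YE26 target range, $M
    multiple = required_multiple(START_ARR_MUSD, target_musd)
    print(f"${target_musd / 1000:.0f}B target = {multiple:.1f}x")
```

So the plan implies roughly 13–16× ARR growth in about 15 months, which is why connected power is the number to watch.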

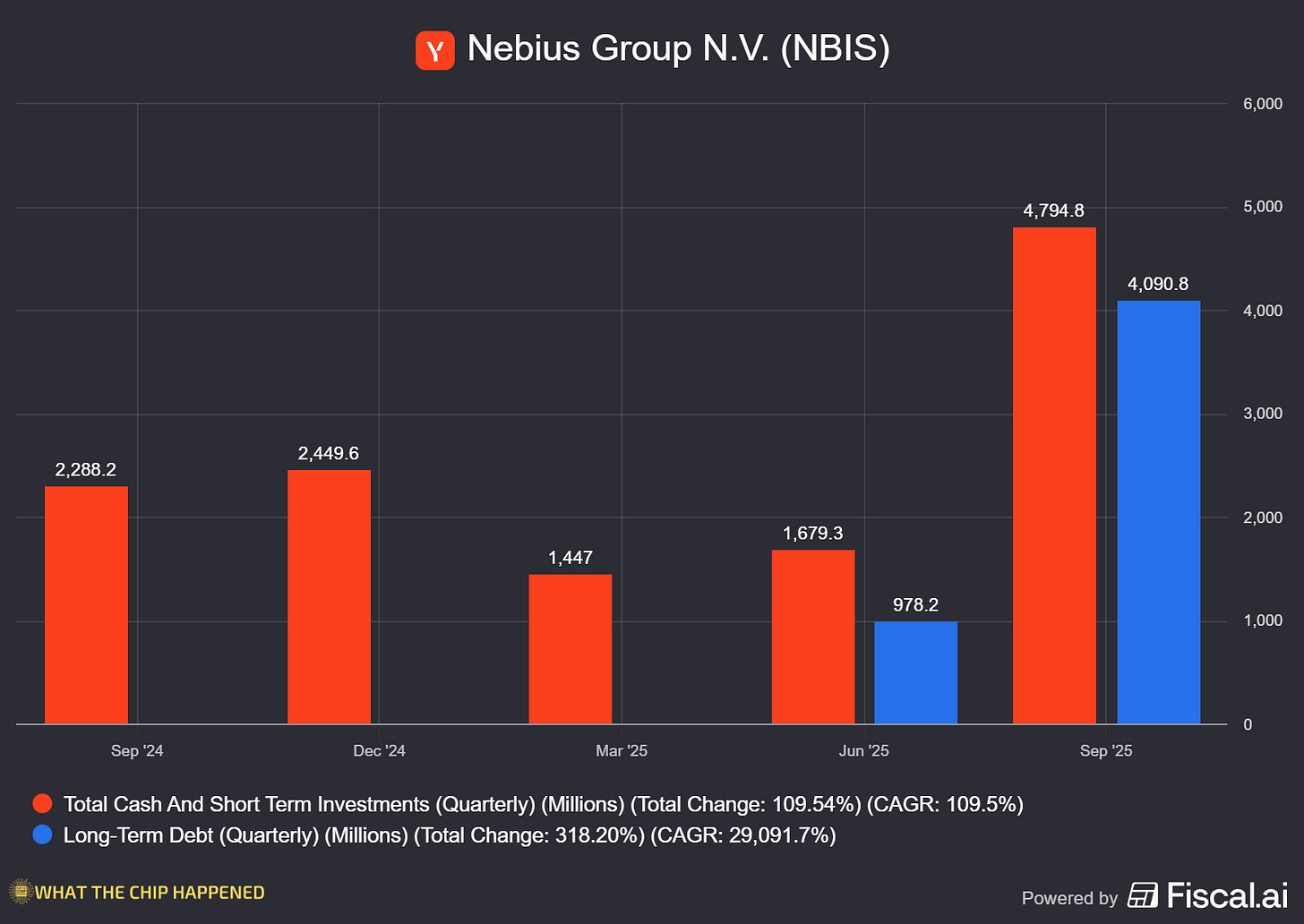

💸 CapEx throttle‑up + staged risk control. 2025 CapEx guidance jumped to ~$5B (from ~$2B) to lock down hardware, power, land and sites. The CFO also reiterated a three‑stage build (land & power → shell & facilities → GPUs) to avoid overspending and preserve flexibility if the market shifts. Cash stood at $4.8B (9/30/25) after raising $4.3B via converts + equity in September.

📊 Quarter in numbers (Q3’25): Revenue $146.1M (+355% Y/Y, +39% Q/Q); adjusted EBITDA of $(5.2)M (an 89% Y/Y improvement). Core AI infrastructure (~90% of revenue) grew ~400% Y/Y and 40% Q/Q with ~19% adjusted EBITDA margin. Cost structure tightened: cost of revenue at 29% of sales, R&D at 31% (vs 98%), SG&A at 61% (vs 149%).

🧾 Guidance keeps tightening. For 2025, Nebius reiterated $500–$550M revenue and $900M–$1.1B year‑end ARR; management still expects adjusted EBITDA to turn slightly positive at group level by year‑end (full‑year still negative).

🧱 Execution risk = timing risk. Management said Q3 landed at the midpoint because revenue is tied to the exact timing of capacity going live. CFO Dado Alonso flagged incremental ARR of ~$12M in Q3 (vs $180M in Q2) due to supply constraints—underscoring how tightly growth couples to deployment.

🧩 Enterprise software push (Aether 3.0 + Token Factory). Nebius rolled out Aether with SOC 2 (incl. HIPAA) and ISO 27001 certifications plus enterprise‑grade IAM/observability, and launched Token Factory to industrialize inference/post‑training. Management’s message: this is the foundation for a stronger 2026 enterprise mix.

🖥️ Technical edge: early Blackwell at scale. Nebius is among the first to deploy NVIDIA B200/B300 broadly (UK B300s; US/Israel B200s). It earned NVIDIA Exemplar Cloud status on H200 (training) and posted top MLPerf v5.1 inference marks: GB200 NVL72 up ~6.7% (offline) and ~14.2% (server) on Llama‑3.1‑405B vs prior bests; B200 delivered ~3× / ~4.3× tokens‑per‑second vs H200 in Nebius’ submissions. Customers include Cursor and Black Forest Labs.

Why AI/Semiconductor Investors Should Care

Nebius’ print reinforces a simple reality: AI compute is still supply‑constrained, and those who secure power, land, and Blackwell‑class GPUs early enjoy pricing power and multi‑year visibility. If Nebius executes its >2.5 GW plan and monetizes Microsoft/Meta capacity on schedule, the path to multi‑billion ARR becomes tangible—good read‑through for NVIDIA demand and for the broader AI data‑center supply chain. The flip side: this is capital‑intensive and timing‑sensitive; as more neoclouds/hyperscalers bring capacity online into 2026–27, pricing could compress—so watch build timing, financing mix, and unit margins closely.

CoreWeave (NASDAQ: CRWV)

🌐 From Backlog to Backstop: Q3 demand soars, one data‑center snag nudges 2025 into ’26

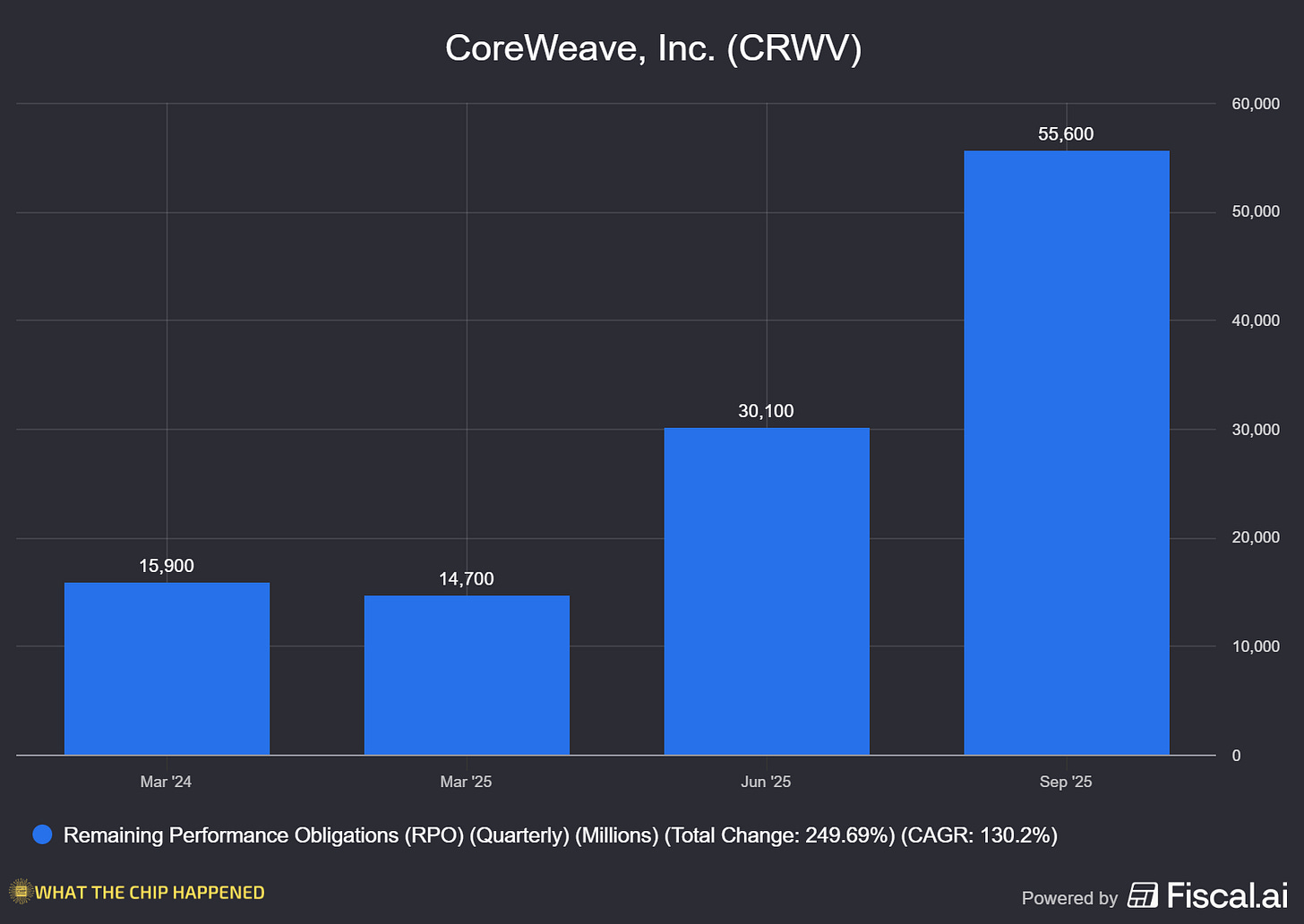

What The Chip: On Nov 10, 2025, CoreWeave posted record Q3 results and a $55.6B revenue backlog, then trimmed 2025 guidance after a third‑party data‑center developer fell behind schedule, pushing revenue into early 2026 rather than losing it. Management also said 2026 CapEx will be well above double the 2025 level to meet surging AI compute demand.

Details:

📈 Backlog goes parabolic. Contracted revenue backlog hit $55.6B (+271% YoY), propelled by multi‑year deals: Meta up to $14.2B, OpenAI +$6.5B (total $22.4B), plus additional hyperscaler wins. Translation: multi‑year demand is locked in.
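A “+271% YoY” figure means backlog is 3.71× last year’s, not 2.71×. A small Python helper (the name is ours) backs out the implied year‑ago backlog from the two numbers quoted above.

```python
def implied_prior(current: float, yoy_growth_pct: float) -> float:
    """Back out the year-ago value from a current value and its % YoY growth."""
    return current / (1 + yoy_growth_pct / 100)


BACKLOG_NOW_BUSD = 55.6  # Q3'25 contracted backlog, $B
print(f"Implied year-ago backlog: ${implied_prior(BACKLOG_NOW_BUSD, 271):.1f}B")  # $15.0B
```

That puts the year‑ago backlog near $15B, implying roughly $40B of net backlog added over the past year.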

🧱 Timing hit, not demand hit. Because one developer ran behind, CoreWeave cut FY25 revenue to $5.05–$5.15B and CapEx to $12–$14B; the affected customer extended timelines to preserve full contract value, deferring rev mostly to Q1’26.

🚀 CapEx blast‑off next year. Management said 2026 CapEx will be “well in excess of double” 2025—think $25–$30B+—to stand up capacity for that backlog. Read‑through: power, racks, cooling, and GPUs remain the bottleneck—and the opportunity.
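“Well in excess of double” has a concrete floor: twice the revised FY25 CapEx guidance of $12–$14B. A trivial Python check (names are ours):

```python
def doubled(capex_range):
    """Return 2x each end of a (low, high) CapEx range: the floor 'well in excess of double' must clear."""
    low, high = capex_range
    return 2 * low, 2 * high


FY25_CAPEX_BUSD = (12.0, 14.0)  # revised FY25 guidance, $B
print(doubled(FY25_CAPEX_BUSD))  # (24.0, 28.0)
```

So 2026 CapEx has to clear roughly $24–$28B, consistent with the $25–$30B+ framing.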

🧩 Customer risk dramatically lower. Single‑customer concentration fell from ~85% at the start of 2025 to ~35% in Q3; 60%+ of backlog is now with investment‑grade customers. That meaningfully improves the durability and quality of revenue.

💰 Profit engine revs (with high interest drag). Q3 revenue $1.36B (+134% YoY); Adj. EBITDA $838M (61%); Adj. Op. Income $217M (16%); GAAP net loss $110M. FY25 interest expense guided to $1.21–$1.25B, a key earnings headwind to watch.
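The margin percentages above are simple ratios to revenue. A quick Python check using the quoted (rounded) $1.36B revenue figure; the function name is ours.

```python
def margin(numerator_musd: float, revenue_musd: float) -> float:
    """Return a profit line as a percentage of revenue."""
    return 100 * numerator_musd / revenue_musd


Q3_REVENUE_MUSD = 1_360  # $1.36B as quoted (rounded)
print(f"Adj. EBITDA margin: {margin(838, Q3_REVENUE_MUSD):.1f}%")  # 61.6%
print(f"Adj. operating margin: {margin(217, Q3_REVENUE_MUSD):.1f}%")  # 16.0%
```

Both round to the 61% and 16% cited; small differences come from revenue being rounded to $1.36B.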

🔌 Infrastructure scale‑up continues. Active power ~590 MW (+120 MW q/q), contracted power ~2.9 GW, 41 data centers. Q3 CapEx $1.9B with construction‑in‑progress $6.9B (+$2.8B q/q)—that’s future capacity not yet in service.

🤝 NVIDIA “backstop” becomes a growth wedge. A $6.3B strategic collaboration lets CoreWeave interrupt and resell NVIDIA‑reserved capacity to smaller AI labs while NVIDIA underwrites unused capacity, mitigating utilization risk and opening up a long tail of customers.

🎯 Year‑end operating targets. Company now expects >850 MW of active power by year‑end; FY25 adjusted operating income guided to $690–$720M.
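A rough sketch of what the FY25 guidance implies, using only figures quoted in this section: guided adjusted operating income versus guided interest expense, and the power CoreWeave must energize in Q4 to get from ~590 MW to >850 MW. Function names are ours, and adjusted operating income is a non‑GAAP figure, so treat this as a gauge of interest drag, not an EPS forecast.

```python
# FY25 guidance ranges quoted in this section, in $M (low, high).
ADJ_OP_INCOME_MUSD = (690, 720)
INTEREST_EXPENSE_MUSD = (1_210, 1_250)


def interest_gap(op_income, interest):
    """Return (best case, worst case) shortfall of adjusted operating income vs interest.

    Best case pairs the lowest interest with the highest income;
    worst case pairs the highest interest with the lowest income.
    """
    best = interest[0] - op_income[1]
    worst = interest[1] - op_income[0]
    return best, worst


def q4_power_adds(active_mw: float, year_end_target_mw: float) -> float:
    """Megawatts to energize to move from current active power to the year-end target."""
    return year_end_target_mw - active_mw


print(interest_gap(ADJ_OP_INCOME_MUSD, INTEREST_EXPENSE_MUSD))  # (490, 560)
print(q4_power_adds(590, 850))  # 260, a minimum since the target is ">850 MW"
```

In plain terms, guided FY25 interest expense exceeds guided adjusted operating income by roughly $0.5B, and hitting >850 MW means energizing 260+ MW in one quarter, more than double the +120 MW added in Q3.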

Why AI/Semiconductor Investors Should Care

CoreWeave’s print screams structural demand: a $55.6B backlog, blue‑chip customers, and a 2026 CapEx surge point to sustained GPU, networking, and power gear orders, which is bullish for suppliers up and down the AI stack. The bear case is execution risk: industry‑wide constraints in powered shells, transformers, and contractors inject volatility, while $1.2B in annual interest keeps the EPS bridge tight. The NVIDIA backstop/interruptible structure lowers utilization risk and broadens TAM into startups (an underappreciated edge), yet the market will keep scoring CoreWeave quarter‑to‑quarter on how fast new MWs energize and how quickly deferred revenue shows up in Q1’26.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.
