Meta Buys AI Agents, NVIDIA Crushes TCO Math, and Applied Digital's Reverse Merger Play

Welcome, AI & Semiconductor Investors,

Meta just wrote a check to fast-track its enterprise ambitions, buying an agent startup that’s already processed 147 trillion tokens. NVIDIA’s getting independent receipts showing its “expensive” chips can cost as little as 1/15th as much per token as AMD’s. And Applied Digital is carving its cloud business out into a reverse-merger special, complete with a medtech shell company and a 97% ownership stake. Let’s Chip In.

What The Chip Happened?

🤖 Meta Acquires 147T-Token Agent Beast to Chase Enterprise Dollars

⚡ NVIDIA’s GB200 Delivers Up to 28x Performance and 15x Better Economics

🔄 Applied Digital Spins Cloud Biz Into ChronoScale via Reverse Merger

Read time: 7 minutes

Join WhatTheChipHappened Community — 15% OFF Annual Use Code CHIPS

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

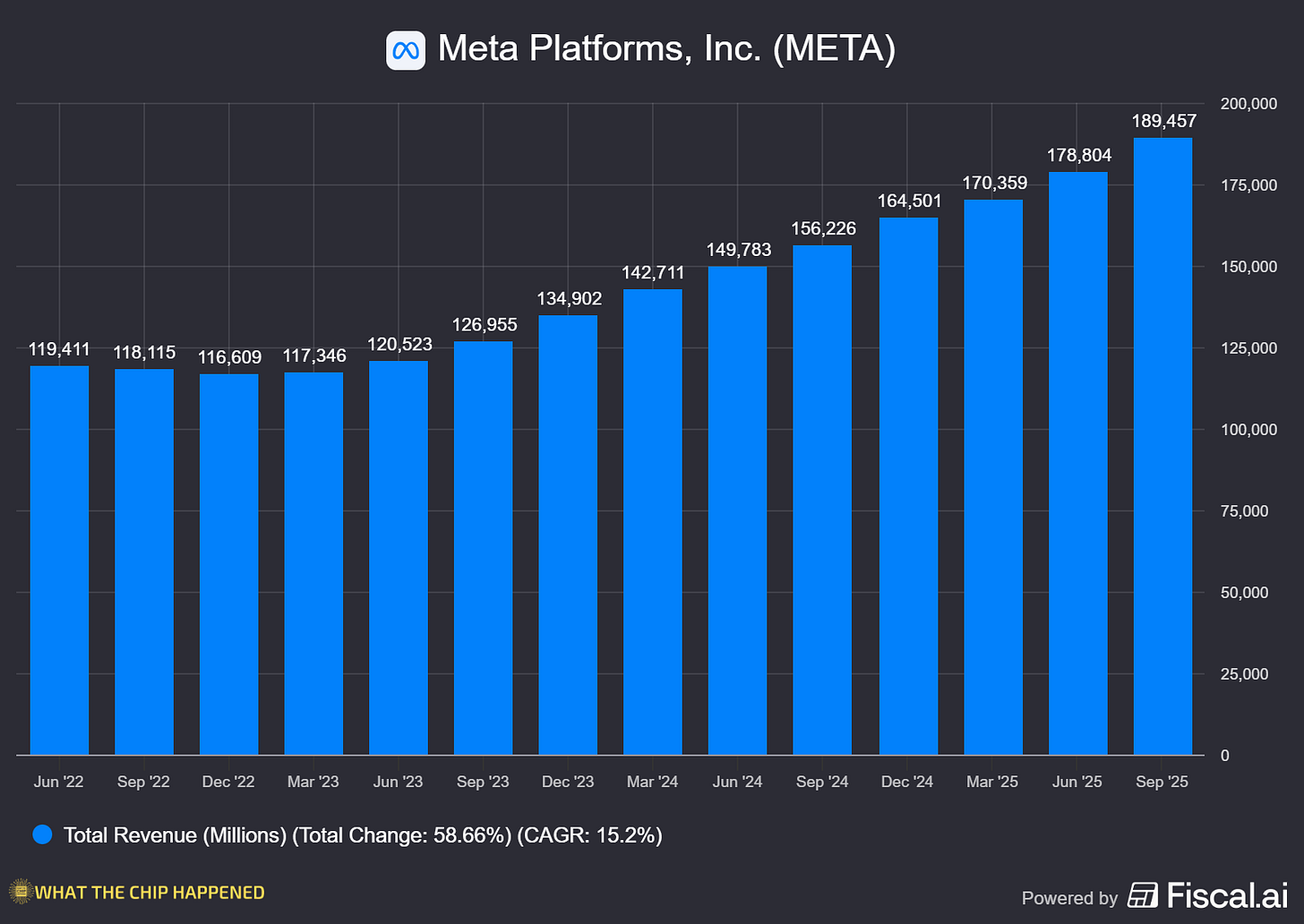

Meta Platforms Inc. (NASDAQ: META)

🤖 Meta Buys Manus AI: 147 Trillion Tokens and an Enterprise Appetite

What The Chip: Meta scooped up Manus, an autonomous AI agent startup that’s already processed over 147 trillion tokens and spun up 80 million virtual computers since launching its General AI Agent earlier this year. Unlike typical acqui-hires where the product gets sunset, Manus will keep operating as a standalone service while its tech gets baked into Meta AI and the company’s broader consumer and business products. This is Meta planting a flag in the enterprise AI agent wars, a market Microsoft and OpenAI currently lead.

Details:

🚀 Scale Before Exit: Manus hit 147T+ tokens served and 80M+ virtual computers created in less than a year, demonstrating product-market fit that justified Meta writing the check rather than building from scratch.

🤖 Autonomous Everything: The platform independently handles market research, coding, data analysis, and complex multi-step workflows. That’s not just chat completion; it’s actual task execution that enterprise customers pay real money for.

💼 Enterprise Focus Explicit: Meta’s announcement specifically highlights “unlocking opportunities for businesses.” This isn’t about making Llama chat better; it’s about chasing business tools that complement its ad business.

📈 Daily Usage at Scale: Already serving “millions of users and businesses worldwide” daily, meaning Meta acquired a revenue-generating business, not a prototype in someone’s garage.

💰 Standalone Revenue Preserved: Unlike a typical acqui-hire, Manus keeps operating and selling its service independently, signaling that Meta sees immediate monetization potential worth preserving during integration.

🏗️ Talent Acquisition Multiplier: The entire Manus team is joining Meta to accelerate general-purpose agent development, so Meta gets both the technology and the team that knows how to ship it at scale.

Why AI/Semiconductor Investors Should Care: This acquisition signals that Meta is serious about diversifying revenue beyond advertising into enterprise AI services. For semiconductor investors, enterprise agent adoption drives sustained, high-margin inference compute demand that runs 24/7, unlike intermittent chatbot queries. The 147T-token milestone provides a reference point for scaling economics as more enterprises deploy autonomous agents. Watch whether Meta can leverage its infrastructure cost advantages and Llama ecosystem while protecting margins; if it can, that would accelerate enterprise AI adoption and drive incremental chip demand across the market.

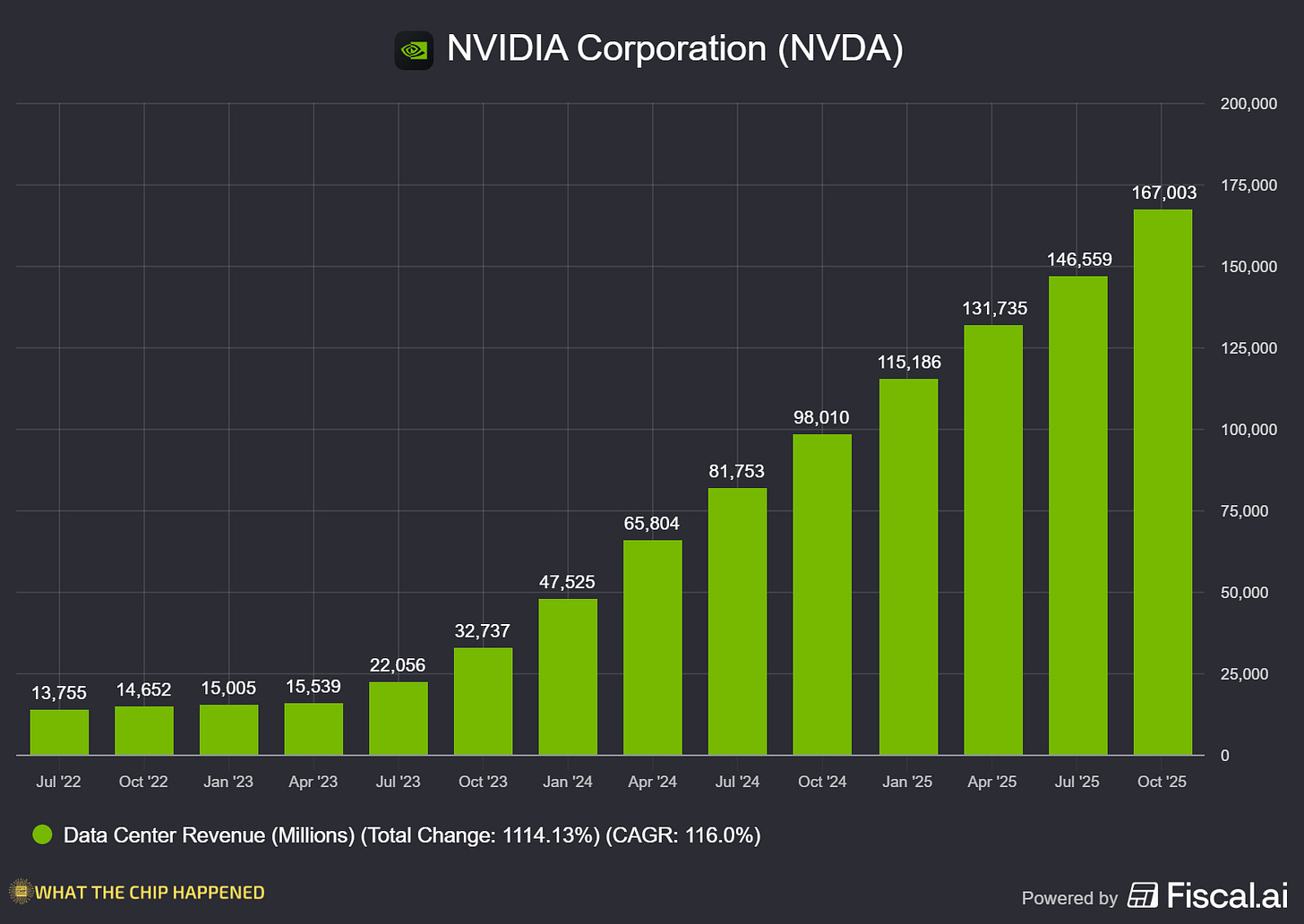

NVIDIA Corporation (NASDAQ: NVDA)

⚡ Independent Research Shows NVIDIA’s “Expensive” Chips Can Cost as Little as 1/15th Per Token

What The Chip: Signal65, the research firm run by former Intel and AMD executive Ryan Shrout, published a comprehensive third-party analysis demolishing the “NVIDIA is expensive” narrative. Its testing shows NVIDIA’s GB200 NVL72 rack-scale system delivering up to 28x the performance of AMD’s MI355X on frontier reasoning workloads like DeepSeek-R1. Despite nearly double the per-GPU hourly price ($16 vs. $8.60), NVIDIA’s architecture delivers a cost per token as low as 1/15th of AMD’s on high-interactivity reasoning workloads. That matters because reasoning models now account for over 50% of all tokens served, according to OpenRouter data.
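The TCO claim boils down to division: hourly price over tokens generated per hour. Here is a back-of-the-envelope Python sketch using only the figures quoted above ($16 vs. $8.60 per GPU-hour and the 28x per-GPU throughput edge at the high-interactivity end). The 100 tokens/sec baseline is a hypothetical placeholder, not a number from the report; it cancels out of the final ratio.

```python
# Back-of-the-envelope cost-per-token comparison.
# Hourly prices and the 28x multiplier are the figures quoted in the article.
# BASELINE_TOKENS_PER_SEC is a hypothetical placeholder; it cancels out of the ratio.

SECONDS_PER_HOUR = 3600
BASELINE_TOKENS_PER_SEC = 100.0  # hypothetical MI355X per-GPU throughput


def cost_per_million_tokens(price_per_gpu_hour: float, tokens_per_sec: float) -> float:
    """Dollars per 1M tokens for one GPU running flat out for an hour."""
    tokens_per_hour = tokens_per_sec * SECONDS_PER_HOUR
    return price_per_gpu_hour / tokens_per_hour * 1_000_000


amd_cost = cost_per_million_tokens(8.60, BASELINE_TOKENS_PER_SEC)
nvda_cost = cost_per_million_tokens(16.00, BASELINE_TOKENS_PER_SEC * 28)

price_ratio = 16.00 / 8.60             # ~1.86x higher sticker price
cost_advantage = amd_cost / nvda_cost  # ~15x cheaper per token

if __name__ == "__main__":
    print(f"Price ratio:         {price_ratio:.2f}x")
    print(f"AMD $/1M tokens:     {amd_cost:.2f}")
    print(f"NVIDIA $/1M tokens:  {nvda_cost:.2f}")
    print(f"Cost-per-token edge: {cost_advantage:.1f}x")
```

Swapping the 28x multiplier for the 5.9x low-interactivity figure shrinks the edge to roughly 3.2x, which is why the headline number is an “up to” claim.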

Details:

🔬 Performance Crush: GB200 NVL72 delivers 5.9x to 28x the per-GPU performance of AMD’s MI355X on DeepSeek-R1, depending on the required tokens per second per user; the advantage widens dramatically as interactivity demands increase.

💰 TCO Inversion: Despite costing 1.86x as much per GPU-hour, NVIDIA achieves up to 15x better performance per dollar thanks to its throughput advantage. Optimizing for GPU price instead of token economics is the strategic mistake buyers make.

🏗️ Rack-Scale Moat: The 8-GPU systems tested, both NVIDIA B200 and AMD MI355X, hit communication bottlenecks when scaling MoE reasoning models; only NVL72’s 72-GPU NVLink domain, with 130 TB/s of aggregate bandwidth, solves the expert-routing problem these architectures create.

🤖 MoE Gap Explodes: On dense models like Llama 3.3 (70B), NVIDIA leads by 1.8-6x; on mixture-of-experts reasoning models, that advantage expands to 6-28x, and 12 of the top 16 open-weight models are now MoE architectures.

📈 Reasoning Is The Market: OpenRouter data shows 50%+ of all tokens now route through reasoning models. This isn’t a niche workload; it’s becoming the primary revenue driver for AI infrastructure.

⚡ Interactivity Ceiling: GB200 NVL72 achieves 275+ tokens/sec/user while MI355X peaks at 75 tokens/sec/user. NVIDIA can deliver user experiences that AMD architecturally cannot match today on frontier models.

🚀 Generational Economics: GB200 NVL72 delivers a ~20x performance improvement over H100 while costing only 1.67x as much per GPU-hour, which works out to ~12x better performance per dollar than the prior generation. Hopper customers have a massive upgrade incentive.

🔮 AMD’s Helios Gambit: The report notes AMD’s upcoming Helios rack-scale platform may close gaps “over the next 12 months,” but NVIDIA’s Vera Rubin is slated to ship on a similar timeline.
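The same ratio (performance multiple divided by price multiple) can be run across the other comparisons in the list. A minimal Python sketch, assuming the multiples quoted above apply per GPU. Pairing the dense-model 1.8x low end with the same 1.86x price premium is an assumption on our part, since pricing for that comparison isn’t given here.

```python
# Performance-per-dollar = performance multiple / price multiple.
# Multiples are the figures quoted in the article. Treating the dense-model
# 1.8x figure as per-GPU and priced at the same 1.86x premium is an ASSUMPTION.

from typing import NamedTuple


class Scenario(NamedTuple):
    label: str
    perf_multiple: float   # NVIDIA throughput relative to the comparison GPU
    price_multiple: float  # NVIDIA hourly price relative to the comparison GPU


def perf_per_dollar(s: Scenario) -> float:
    """Above 1.0 means NVIDIA is cheaper per token; below 1.0 means the rival is."""
    return s.perf_multiple / s.price_multiple


MI355X_PRICE_RATIO = 16.00 / 8.60  # ~1.86x

SCENARIOS = [
    Scenario("GB200 vs MI355X, MoE, high interactivity", 28.0, MI355X_PRICE_RATIO),
    Scenario("GB200 vs MI355X, MoE, low interactivity", 5.9, MI355X_PRICE_RATIO),
    Scenario("NVIDIA vs MI355X, dense, low end (assumed pricing)", 1.8, MI355X_PRICE_RATIO),
    Scenario("GB200 NVL72 vs H100", 20.0, 1.67),
]

if __name__ == "__main__":
    for s in SCENARIOS:
        print(f"{s.label:<52} {perf_per_dollar(s):5.2f}x")
```

Under those assumptions, the H100 comparison lands at roughly 12x, matching the figure above, while the low end of the dense-model range comes out just under 1.0x. That is consistent with the caveat that AMD may still win cost-sensitive dense-model deployments.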

Why AI/Semiconductor Investors Should Care: This independent analysis directly challenges the core bull thesis for AMD and other NVIDIA competitors: that a price-per-GPU advantage translates into customer wins. For frontier MoE reasoning workloads, which now dominate inference economics, NVIDIA’s rack-scale architecture delivers better unit economics despite higher sticker prices. This supports the sustainability of NVIDIA’s pricing power and explains why hyperscalers keep choosing NVIDIA despite vocal cost concerns. The catch: the analysis focuses on bleeding-edge reasoning models, and AMD may still win cost-sensitive dense-model deployments. Watch whether AMD’s Helios platform can narrow the MoE gap before Vera Rubin extends NVIDIA’s lead, and whether enterprises prioritize lowest TCO or lowest upfront cost in buying decisions.

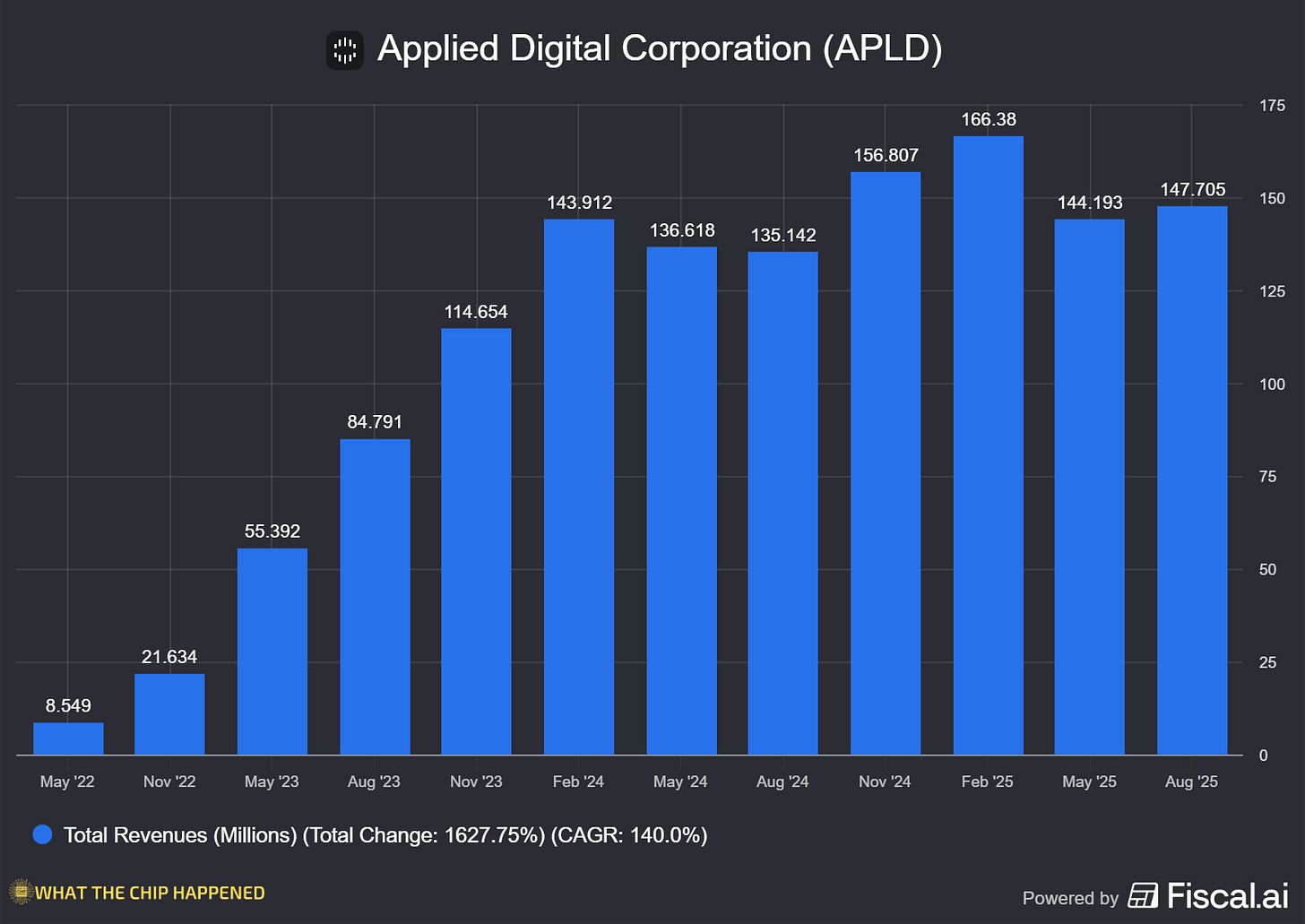

Applied Digital Corporation (NASDAQ: APLD)

🔄 Applied Digital Spins GPU Cloud Into ChronoScale via Reverse Merger Special

What The Chip: Applied Digital is carving out its cloud computing division and merging it with Nasdaq-listed Ekso Bionics (NASDAQ: EKSO), a medical exoskeleton company, in a reverse merger that would create ChronoScale Corporation, a pure-play GPU cloud platform targeting AI workloads. Post-closing, Applied Digital would own roughly 97% of the new entity while retaining its data center development business. The deal structure is classic SPAC-adjacent financial engineering: separate the high-multiple cloud story from the capital-intensive real estate story, give each its own public currency, and let the market re-rate both on a sum-of-the-parts basis. Ekso, meanwhile, provides the shell while it explores selling its legacy medtech business.

Details:

📊 Meaningful Scale: Applied Digital Cloud generated $75.2 million in trailing twelve-month revenue as of August 31, 2025. This isn’t a startup spin; it’s a business with real revenue exiting the parent.

🚀 H100 Early Mover: Applied Digital Cloud was “among the first platforms to deploy NVIDIA’s H100 GPUs at scale in 2023.” Securing allocation early matters in a capacity-constrained market, and that head start is a legacy advantage ChronoScale inherits.

🔄 Deal Structure: The companies have signed a non-binding term sheet for a business combination in which Applied Digital Cloud merges with EKSO to create ChronoScale, with Applied Digital owning ~97%. APLD keeps control of the cloud business while gaining a separately traded public currency.

🏗️ Strategic Separation Logic: Data center ownership (capital-intensive, long-cycle development) and cloud operations (software-forward, faster growth) get to “scale independently with distinct growth trajectories and capital flexibility.”

🔌 Synergy Preservation: ChronoScale is expected to maintain “advantaged access” to Applied Digital’s expanding AI factory campus portfolio for faster capacity deployment, keeping the strategic tie while separating the equity structures.

⏰ Timeline and Contingencies: Closing is expected in H1 2026, subject to due diligence, binding documentation, regulatory approval, and shareholder votes. Because the term sheet is non-binding, terms could shift or the deal could collapse entirely before closing.

💼 Ekso’s Exit Path: Ekso Bionics keeps operating while it explores a sale of its legacy medtech business, serving as the classic shell company: a public listing vehicle for a private asset.

Why AI/Semiconductor Investors Should Care: This spin-merge would create a pure-play AI infrastructure public equity, potentially unlocking multiple expansion if the market assigns ChronoScale a CoreWeave-style premium versus Applied Digital’s current blended multiple. The bull case: GPU cloud businesses trade at higher multiples than data center REITs, and separation captures that arbitrage. The bear case: at $75M in TTM revenue, ChronoScale would be a small-cap player in capital-intensive infrastructure, competing against hyperscalers with seemingly bottomless balance sheets. Watch the final deal terms, any committed financing, customer concentration disclosures, and whether APLD announces additional GPU allocation commitments. Executed well, this could be a legitimate value unlock; botched, it’s financial engineering distracting from operating fundamentals.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.