Micron's HBM Gamble, NVIDIA's $100B AI Bet, and IREN's Bold GPU Play: What's Next?

Welcome, AI & Semiconductor Investors,

Can Micron's momentum in HBM memory vault its margins toward 48% and cement its role in AI's trillion-dollar infrastructure surge? Meanwhile, NVIDIA's historic $100 billion bet on OpenAI promises to redefine the scale of AI compute—but execution risk looms large. Not to be overlooked, newcomer IREN makes a bold play by doubling its GPU cloud, potentially reshaping how investors view the AI infrastructure game. — Let’s Chip In.

What The Chip Happened?

🎯 Micron’s HBM Moment: Can a Beat‑and‑Raise Keep the Run Going?

⚡️ NVIDIA + OpenAI: 10GW Megabuild, $100B Bet on Compute

🧠 IREN doubles AI Cloud to 23k GPUs, targets >$500M ARR by Q1’26

[NVIDIA and Intel Forge x86–AI Partnership for PCs and Cloud]

Read time: 7 minutes

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

Micron Technology (NASDAQ: MU)

🎯 Micron’s HBM Moment: Can a Beat‑and‑Raise Keep the Run Going?

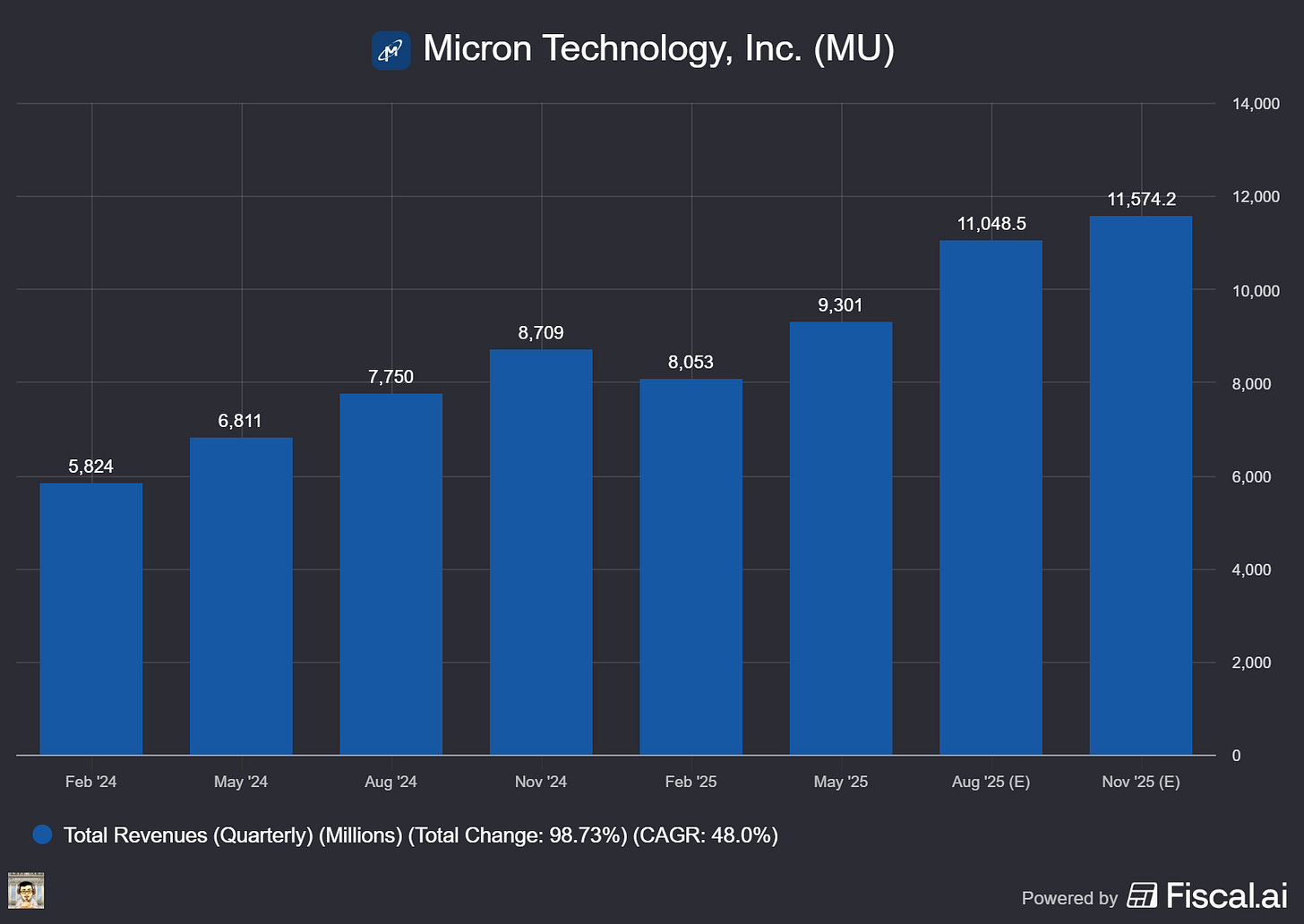

What The Chip: Micron reports today, Sept 23, 2025, after the close (webcast 4:30 pm ET, post‑earnings analyst call 6:00 pm ET). Management already raised fiscal Q4 guidance on Aug 11 to revenue of $11.2B ±$0.1B, non‑GAAP GM of 44.5% ±0.5pp, and non‑GAAP EPS of $2.85 ±$0.07, citing stronger DRAM pricing. The Street sits around EPS of $2.86 on $11.2B in revenue and will zero in on an FQ1 (Nov) outlook near $11.9B. Options price a roughly ±10% move.

Details:

💡 Set‑up & consensus. The Aug 11 guide lift to $11.2B revenue / 44.5% GM / $2.85 EPS set the bar; FactSet consensus sits at roughly $2.86 EPS on ~$11.2B revenue. First‑print focus: does revenue top $11.3B, and does GM push toward ~48%? The whisper number for FQ1 is ≈ $11.9B.

🚀 HBM execution & visibility. In Q3 (May), Micron posted record $9.30B revenue and said HBM revenue grew ~50% q/q. Management: “HBM3E 12‑high yield and volume ramp [is] progressing extremely well,” with shipment crossover in FQ4 and HBM share tracking to overall DRAM share in 2H‑CY25. 2025 HBM is sold out, and checks point to 2026 capacity lined up with multiple customers; TrendForce pegs Micron ~23–24% HBM share by YE25.

📈 Pricing tailwind. DRAM & NAND contract prices tightened into Q3 and Q4 as AI server demand pulled supply; multiple trackers now flag double‑digit q/q DRAM increases and NAND up mid‑single to low‑double digits into 2H. That backdrop supports higher ASPs into the print and guide.

🧱 Mix & margins. Bulls want a GM ≥47–48% to extend the run; NAND margins still lag DRAM, so mix (HBM/DDR5 vs legacy) matters. Morgan Stanley floated $12.5B as a stretch revenue case for Q4, while also flagging potential HBM3E price pressure as more supply arrives.

🧭 FQ1 (Nov) outlook. The market likely rewards a guide ≥$12B (AI + pricing power acceleration). A ~$11.5–11.9B outlook keeps the “orderly upcycle” narrative intact; anything lighter risks profit‑taking with options implying ~±10% moves.

🏗️ Capex & U.S. build‑out. FY25 capex ≈ $14B, with the “overwhelming majority” going to HBM (facilities, back‑end, R&D). Micron’s U.S. expansion is backed by up to $6.1B in CHIPS Act incentives for Idaho + New York fabs. Expect color on FY26 capex in the calls.

🧩 Org & customers. Micron formed a Cloud Memory Business Unit this spring to focus on hyperscalers and HBM—read‑through for customer engagement breadth (NVIDIA, AMD, plus AI ASICs).

🧾 Taxes, tariffs & geopolitics. FY26 tax rate steps up to the high‑teens with Singapore’s global minimum tax (a modest EPS headwind). Management also noted new‑tariff impacts aren’t in guidance; China’s limits on Micron sales to critical infrastructure remain a structural overhang.

⚠️ HBM4 watch‑outs. Street will listen for HBM4 timing/specs and any rebuttal to questions about meeting higher speed requirements; SK hynix’s HBM4 posture intensifies competition into 2026.
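The set‑up above boils down to a few ranges and thresholds, which can be sketched in Python. This is a rough illustration rather than a model: the `GuideRange` class and `classify_fq1_guide` function are hypothetical names, the labels are our shorthand for the scenarios described above, and treating anything from $11.5B up to (but not including) $12B as "orderly upcycle" is an assumption, since the text only names ~$11.5–11.9B.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuideRange:
    """A guided midpoint with a symmetric plus/minus band."""
    midpoint: float
    half_width: float

    @property
    def low(self) -> float:
        return self.midpoint - self.half_width

    @property
    def high(self) -> float:
        return self.midpoint + self.half_width

    def contains(self, value: float) -> bool:
        # Floating-point rounding can misjudge values exactly on the edge.
        return self.low <= value <= self.high


# Figures from the Aug 11 guidance update quoted above.
REVENUE_B = GuideRange(11.2, 0.1)          # revenue, $B
GROSS_MARGIN_PCT = GuideRange(44.5, 0.5)   # non-GAAP gross margin, %
EPS_USD = GuideRange(2.85, 0.07)           # non-GAAP EPS, $


def classify_fq1_guide(revenue_b: float) -> str:
    """Map an FQ1 (Nov) revenue guide, in $B, to the scenarios in the text.

    Assumption: the 11.9-12.0 gap is folded into "orderly upcycle".
    """
    if revenue_b >= 12.0:
        return "rewarded"
    if revenue_b >= 11.5:
        return "orderly upcycle"
    return "profit-taking risk"


if __name__ == "__main__":
    print("Street EPS inside guided band:", EPS_USD.contains(2.86))
    print("48% GM inside guided band:", GROSS_MARGIN_PCT.contains(48.0))
    for guide in (11.2, 11.9, 12.0):
        print(guide, "->", classify_fq1_guide(guide))
```

One thing the sketch makes visible: the ~48% gross margin bulls are hoping for sits well above the guided 44.0–45.0% band, so it would take a clear beat rather than an in‑line print.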

Why AI/Semiconductor Investors Should Care

HBM is the chokepoint for AI accelerators; sold‑out 2025 HBM and a robust 12‑Hi ramp suggest Micron can expand mix and margins as AI datacenter capex stays elevated through 2025, and potentially into 2026 as contracts roll. The gross‑margin print and FQ1 guide are the stock’s near‑term catalysts; a GM ≥~48% with a ≥$12B Nov‑quarter guide would validate pricing power + HBM share gains and carry read‑throughs to hyperscaler buildouts, GPU supply chains, and semi‑cap names tied to HBM capacity adds.


NVIDIA (NASDAQ: NVDA)

⚡️ NVIDIA + OpenAI: 10GW Megabuild, $100B Bet on Compute

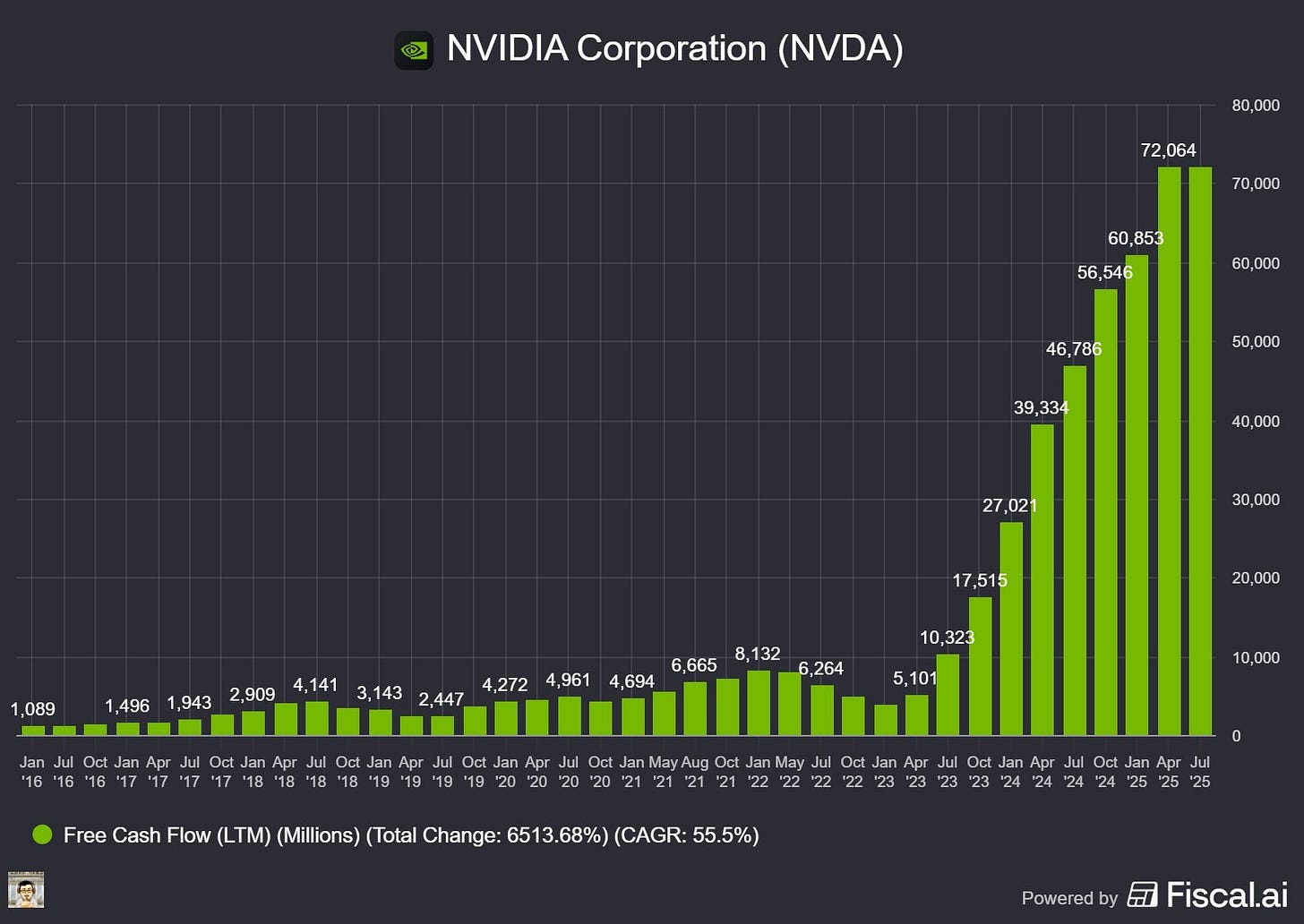

What The Chip: On September 22, 2025, OpenAI and NVIDIA signed a letter of intent to deploy at least 10 gigawatts (GW) of NVIDIA systems—millions of GPUs—for OpenAI’s next‑gen AI infrastructure. NVIDIA also intends to invest up to $100B in OpenAI progressively as each gigawatt is deployed; the first 1GW arrives in H2 2026 on NVIDIA’s Vera Rubin platform.

Details:

🧾 Deal basics: The LOI targets ≥10GW of NVIDIA systems to train and run OpenAI’s next wave of models on the path toward “superintelligence,” with financing tied to each GW that comes online. Millions of GPUs will power the build.

🗓️ Timeline: Phase one turns on in H2 2026 using the Vera Rubin platform. (Think of a GW as the amount of grid power the AI factories can draw at once.)

🧱 Not (yet) binding: It’s a letter of intent—a major signal, but generally not legally binding. Execution risk (sites, power, permitting, supply) still matters.

🧮 Scale of silicon: Jensen Huang told media the project could encompass as many as ~5 million NVIDIA chips—underscoring unprecedented compute demand.

🧠 Platform clarity: Vera Rubin is NVIDIA’s 2026 AI platform combining Rubin GPUs and Vera CPUs; an NVL144 CPX rack integrates Rubin + Rubin CPX + Vera to deliver ~8 exaFLOPs per rack and ~100TB fast memory—designed for long‑context, reasoning‑heavy AI. (Rubin succeeds Blackwell.)

🤝 Preferred partner status: OpenAI named NVIDIA its preferred strategic compute & networking partner; the two will co‑optimize roadmaps. This complements work with Microsoft, Oracle, SoftBank and “Stargate” partners.

➕ “Additive,” not a swap: In a same‑day CNBC interview, Huang emphasized the new data centers are additive to previously announced and contracted capacity—on top of what investors already expect.

📈 User demand backdrop: OpenAI cites ~700M weekly active users for ChatGPT, fueling the need for more training and inference capacity.

⚡ Power reality check: 10GW ≈ the output of ~10 utility‑scale nuclear reactors—a reminder that power availability and grid interconnects are critical path risks.

🗣️ Management said it plainly: “Deploying 10 gigawatts to power the next era of intelligence,” said Jensen Huang (NVIDIA CEO). “Everything starts with compute,” added Sam Altman (OpenAI CEO). Greg Brockman (OpenAI president) said they’re “excited to deploy 10GW of compute with NVIDIA.”
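The headline numbers above can be turned into per‑gigawatt figures with some simple division. The Python sketch below does that under loud assumptions: it treats the "up to $100B" as released in equal tranches per GW (the LOI only says "progressively as each gigawatt is deployed"), and it spreads the "as many as ~5 million" chips evenly across 10GW. The function names are ours, not anything from NVIDIA or OpenAI.

```python
TOTAL_GW = 10                # minimum deployment named in the LOI
MAX_INVESTMENT_B = 100.0     # "up to $100B", in $B
MAX_CHIPS = 5_000_000        # "as many as ~5 million" chips, per Huang


def investment_per_gw_b() -> float:
    """$B per GW, ASSUMING equal tranches (not stated in the LOI)."""
    return MAX_INVESTMENT_B / TOTAL_GW


def cumulative_investment_b(gw_deployed: float) -> float:
    """Cumulative $B invested after `gw_deployed` GW, capped at the maximum."""
    capped_gw = max(0.0, min(gw_deployed, TOTAL_GW))
    return capped_gw * investment_per_gw_b()


def chips_per_gw() -> float:
    """Chips per GW, ASSUMING an even spread across the build."""
    return MAX_CHIPS / TOTAL_GW


def kw_per_chip() -> float:
    """All-in facility power per chip in kW (1 GW = 1,000,000 kW).

    This is a facility-level figure: it folds in CPUs, networking,
    cooling and other overhead, so it is not a chip TDP.
    """
    return TOTAL_GW * 1_000_000 / MAX_CHIPS


if __name__ == "__main__":
    print(f"~${investment_per_gw_b():.0f}B per GW")
    print(f"~{chips_per_gw():,.0f} chips per GW")
    print(f"~{kw_per_chip():.1f} kW of site power per chip")
    print(f"After the first GW: ~${cumulative_investment_b(1):.0f}B")
```

Under those assumptions the first 1GW in H2 2026 would correspond to roughly $10B of investment and about 500,000 chips, at around 2 kW of site power per chip. Actual tranche sizes and chip counts could differ materially once definitive agreements are signed.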

Why AI/Semiconductor Investors Should Care

For NVDA, this is a multi‑year demand pipeline tied to the Vera Rubin cycle—potentially millions of accelerators plus networking and systems revenue, on top of existing backlogs. The “additive” framing matters: it implies incremental shipments beyond prior public run‑rate expectations.

For the ecosystem, the plan reinforces NVIDIA’s platform pull and raises the bar for rivals across HBM memory, advanced packaging, photonic/ethernet fabrics, and power infrastructure. But it’s still an LOI—site selection, energy procurement, permitting, and capital markets discipline remain gating factors.

Watch for: (1) firm purchase agreements and locations; (2) HBM4/Rubin supply ramp milestones; (3) how Microsoft, Oracle, and “Stargate” capacity interlocks with OpenAI’s own AI factories; and (4) any regulatory scrutiny around a supplier taking a non‑controlling equity stake in a top customer.

IREN Limited (NASDAQ: IREN)

🧠 IREN doubles AI Cloud to 23k GPUs, targets >$500M ARR by Q1’26

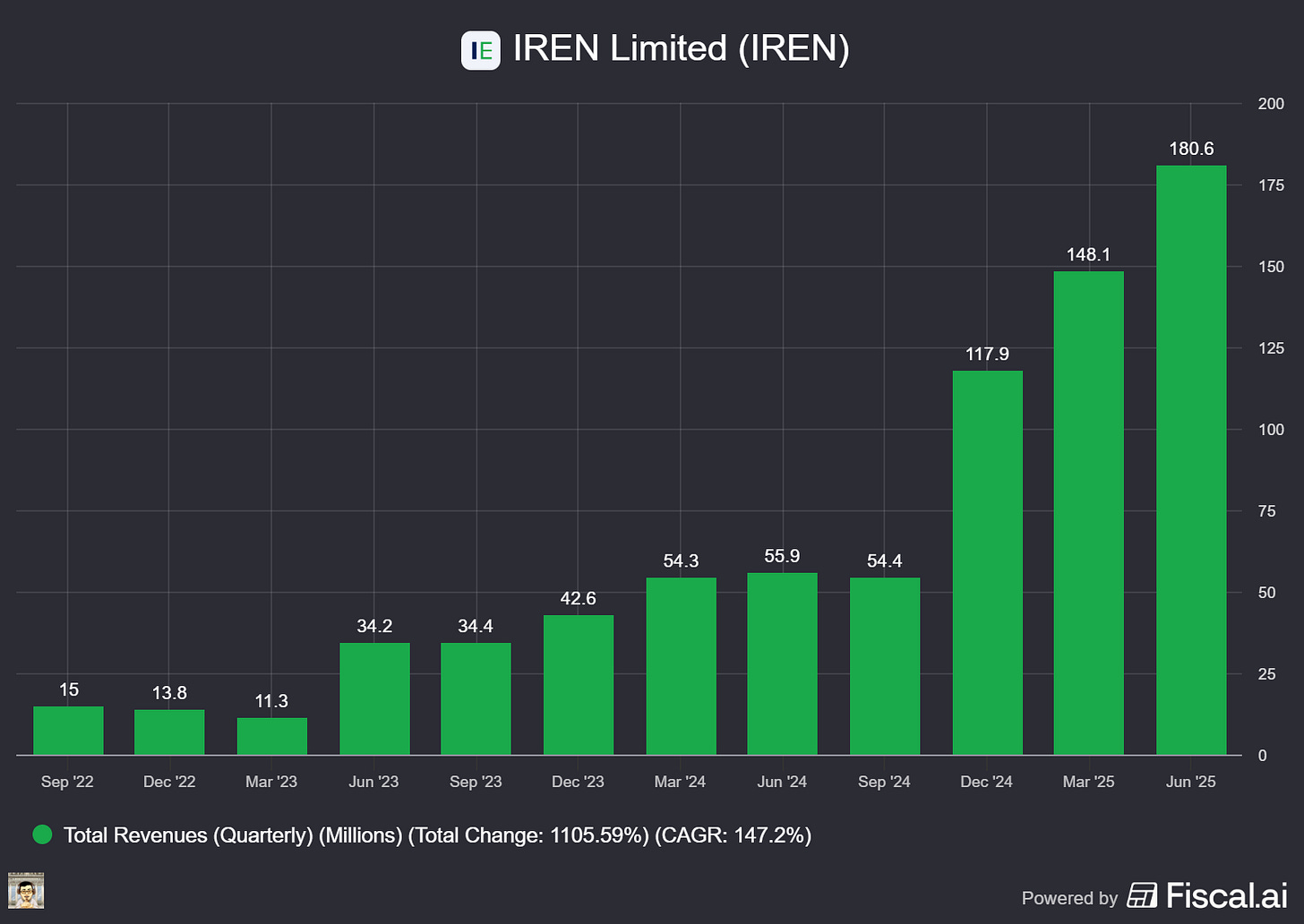

What The Chip: On Sept. 22, 2025, IREN said it doubled its AI Cloud to ~23,000 GPUs after ordering 12.4k more GPUs and now aims for >$500M AI Cloud ARR by Q1 2026. Deliveries will roll into the company’s Prince George (B.C.) campus over the coming months.

Details:

🔢 What they bought: 7,100 NVIDIA B300s, 4,200 NVIDIA B200s, and 1,100 AMD MI350Xs for ~$674M (includes CPUs, storage, networking, onsite services). That lifts the fleet to ~23k GPUs.

(Back‑of‑envelope: ~$674M / 12,400 GPUs ≈ $54.4K per GPU average.)

🧩 Fleet mix (approx.): B200/B300 83% (19.1k), H100/H200 8% (1.9k), GB300 (liquid‑cooled Blackwell) 5% (1.2k), AMD MI350X 4.8% (1.1k)—broadening vendor options and workload fit.

🚚 Where it’s going: Staged deliveries into IREN’s Prince George, B.C. campus; the broader B.C. footprint can support >60k Blackwell GPUs at maturity, per company estimates.

💵 Revenue math & caveats: IREN is targeting >$500M ARR by Q1’26, based on contracted GPU‑hour pricing and internal utilization assumptions; not fully contracted and assumes on‑time delivery/commissioning. (Our math: $500M / 23k GPUs ≈ $21.7K per GPU/year, ≈ $2.48/GPU‑hour at 100% utilization.)

🗣️ Management says: “Customers are increasingly seeking partners who can deliver scale quickly,” noted Daniel Roberts, IREN’s co‑founder & co‑CEO.

🧱 Financing & Bitcoin mining: Financing “workstreams” are underway to fund growth; any impact on Bitcoin mining is expected to be mitigated by redeploying ASICs to other sites.

🧪 AMD angle (notable): IREN adds AMD MI350X to a stack previously centered on NVIDIA—making it one of the earlier ‘neocloud’ providers to include next‑gen AMD accelerators. Peers have begun similar moves (e.g., Crusoe disclosed a large MI355X order in June). This multi‑vendor strategy can hedge supply and pricing risk.

📈 Market reaction: Headlines around the expansion pushed shares higher in early trading following the release.
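The back‑of‑envelope math in the bullets above is easy to reproduce and stress‑test. Here is a minimal Python sketch using only the figures quoted in this section; the helper names are hypothetical, and the 80% utilization case is an illustrative assumption of ours, not a company figure.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

# Figures quoted in the section above.
ORDER_COST_USD = 674e6                  # includes CPUs, storage, networking, services
ORDER_GPUS = 7_100 + 4_200 + 1_100      # B300 + B200 + MI350X = 12,400
FLEET_GPUS = 23_000                     # approximate fleet after the order
TARGET_ARR_USD = 500e6                  # IREN's ">$500M" target, taken at $500M


def all_in_cost_per_gpu(cost_usd: float, gpus: int) -> float:
    """Average all-in spend per GPU (not a GPU list price)."""
    return cost_usd / gpus


def implied_rate_per_gpu_hour(arr_usd: float, gpus: int,
                              utilization: float = 1.0) -> float:
    """Hourly price per GPU needed to hit `arr_usd` at a given utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return arr_usd / (gpus * HOURS_PER_YEAR * utilization)


if __name__ == "__main__":
    per_gpu = all_in_cost_per_gpu(ORDER_COST_USD, ORDER_GPUS)
    print(f"All-in cost per GPU: ~${per_gpu / 1000:.1f}K")
    for util in (1.0, 0.8):  # 0.8 is an illustrative assumption
        rate = implied_rate_per_gpu_hour(TARGET_ARR_USD, FLEET_GPUS, util)
        print(f"Implied rate at {util:.0%} utilization: ~${rate:.2f}/GPU-hour")
```

The design point is the utilization parameter: the $2.48/GPU‑hour figure only holds at 100% utilization, and every point of idle capacity raises the hourly price IREN needs to realize to reach the same ARR.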

Why AI/Semiconductor Investors Should Care

This is a scaled bet on Blackwell‑class capacity with early AMD exposure. If IREN converts staged deliveries and pre‑contracts into high utilization, >$500M ARR by Q1’26 meaningfully diversifies the business beyond Bitcoin and supports a higher infrastructure valuation multiple. The AMD MI350X addition matters because a working ROCm ecosystem plus high‑memory accelerators gives AI customers NVIDIA alternatives—a lever on supply, pricing, and performance per watt—while the NVIDIA Preferred Partner status (Aug. 28) helps secure top‑tier supply. Key risks: financing and build‑out timing, software stack maturity for AMD, and the gap between targeted ARR and contracted revenue.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro


Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.

The overview above provides key insights every investor should know, but subscribing to the premium tier unlocks deeper analysis to support your Semiconductor, AI, and Software journey. Behind the paywall, you’ll gain access to in-depth breakdowns of earnings reports, keynotes, and investor conferences across semiconductor, AI, and software companies. With multiple deep dives published weekly, it’s the ultimate resource for staying ahead in the market. Support the newsletter and elevate your investing expertise—subscribe today!

[Paid Subscribers] NVIDIA and Intel Forge x86–AI Partnership for PCs and Cloud

Date of Event: September 18, 2025

Executive Summary

Reminder: We do not discuss valuations; this is only an analysis of the earnings calls and conferences.

On a joint special call, NVIDIA CEO Jensen Huang and Intel CEO Lip‑Bu Tan announced a multi‑year collaboration to co‑develop custom x86 central processing units (CPUs) for NVIDIA’s data‑center AI platforms and new client system‑on‑chips (SoCs) that integrate NVIDIA graphics processing unit (GPU) chiplets for personal computers. Huang framed the deal as “a historic partnership to jointly develop multiple generations of x86 CPUs for data centers and PC products,” adding that NVIDIA will “become a very large customer of Intel CPUs.” The companies positioned the collaboration against large, quantifiable opportunities: Huang cited a data‑center CPU market “about $25 billion” annually and said the partnership aims to “address some $25 billion, $50 billion of annual opportunity,” while pointing to “150 million laptops sold each year” as the client total addressable market (TAM). Intel emphasized that “NVIDIA is an investor to Intel,” with Huang saying NVIDIA is “delighted to be a shareholder,” underscoring commitment beyond product plans.

Growth Opportunities

AI infrastructure. NVIDIA’s current flagship scale‑up design—what Huang called “NVLink 72 rack‑scale computers”—lets an entire rack behave “as if it’s one giant computer, one giant GPU.” Until now, NVIDIA achieved that with its own ARM‑based CPU (Grace/Vera) tightly coupled to its GPUs. x86 servers typically attach GPUs over PCI Express (PCIe), which Huang said limited scale‑up to “NVLink 8.” The partnership moves x86 into NVIDIA’s NVLink ecosystem, enabling x86‑based racks to scale “also to NVLink 72.” In plain terms: more bandwidth and tighter CPU‑GPU coupling should allow larger AI models and faster training/inference on familiar x86 infrastructure, broadening the market segments and customers NVIDIA and Intel can serve together.