Oracle Stumbles, Broadcom Soars, and China Ghosted Nvidia's H200

Welcome, AI & Semiconductor Investors,

Oracle’s AI buildout hits labor and material walls. Broadcom prints a $73 billion backlog that won’t stop growing. And Nvidia gets the green light to sell H200s to China, except Beijing might not pick up the phone. Three stories that reveal where AI infrastructure is overheating, where it’s printing money, and where geopolitics just made everything messier. — Let’s Chip In.

What The Chip Happened?

🚨 Oracle’s $300B OpenAI Dream Hits 2028 Delays

🚀 Broadcom’s AI Revenue Doubles as $73B Backlog Explodes

🇨🇳 Trump Greenlights H200 China Sales—But Beijing Says No Thanks

Read time: 7 minutes

Join WhatTheChipHappened Community — 33% OFF Annual Use Code CYBER

Get 15% OFF FISCAL.AI — ALL CHARTS ARE FROM FISCAL.AI —

Oracle Corporation (NASDAQ: ORCL)

🚨 Oracle’s $300B OpenAI Data Centers Pushed to 2028 Amid Labor Crunch

What The Chip: Reports suggest Oracle is running into what many feared: building AI infrastructure at hyperscale is harder than signing the contracts. According to Bloomberg, the company has pushed back completion dates for several OpenAI data centers from 2027 to 2028, citing shortages of skilled labor and materials. The report compounds a brutal earnings aftermath in which shares fell 15% after Q2 results and now sit 45% below their September highs. The Abilene facility remains on track, but the cracks in Oracle’s $300 billion AI infrastructure buildout are showing.

Details:

⚠️ Delay Timeline: Multiple large-scale AI data centers Oracle is constructing for OpenAI have been pushed from 2027 to 2028 delivery. The facilities are part of the Stargate initiative to deploy two million AI accelerators and 5 GW of power capacity.

🔨 Root Cause Ambiguity: Bloomberg’s sources blame “labor and materials” shortages, but Oracle hasn’t clarified whether this means construction workers, specialized data center equipment, or both. That vagueness is concerning—it suggests Oracle doesn’t control the bottleneck.

🛡️ Oracle’s Denial: The company issued a Friday denial of the delay report, but shares still fell 5% that day following a 10% drop the day before. When your stock is down 45% in three months, denials don’t reassure anyone.

💰 Debt Market Panic: The cost of insuring Oracle’s debt against default hit a five-year high Thursday and climbed again Friday. Credit markets are pricing in real execution risk on a company carrying $111.6 billion in debt.

📈 Capex Surge: Oracle now projects $50 billion in full-year capital expenditures, up from $35 billion as of September. That’s a 43% increase in capex guidance in just one quarter—a sign of either ambition or desperation.

📉 Q2 Revenue Miss: Oracle reported $16.06 billion in revenue versus $16.21 billion expected, though adjusted EPS of $2.26 crushed the $1.64 estimate. The revenue miss is what tanked the stock: AI investors care about top-line growth, not bottom-line engineering.

🚩 Analyst Exodus: At least 13 brokerages slashed price targets post-earnings. Evercore cut its target from $385 to $275 while maintaining Buy, citing the “long-term opportunity” but acknowledging near-term capex and leverage friction (Wall Street-speak for “this is going to hurt”).

🎯 Abilene Still On Track: The first Stargate data center, in Abilene, Texas, remains on schedule, with 96,000 Nvidia chips already delivered. One facility doesn’t fix the narrative, but it’s the only execution win Oracle can point to right now.

⚛️ Infrastructure Bottleneck Signal: Analyst Bob O’Donnell nailed it: “Concerns about the ability to build data centers due to construction delays, power availability and other practical factors are becoming a much bigger factor than expected demands for AI capabilities.” Translation: demand isn’t the problem—physics is.

🔮 Concentration Risk: OpenAI itself is unprofitable and projected to spend over $1 trillion through 2030. Oracle’s backlog is impressive until you realize it’s concentrated on a customer burning cash and dependent on Microsoft’s capital patience. Rating agencies Moody’s and S&P have shifted to negative outlooks, warning net debt-to-EBITDA could exceed 4× by 2027-2028.
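For readers who want to check the math, here is a back-of-envelope sketch using only figures quoted above. The `pct_change` helper is a hypothetical name of our own, and the second calculation simply illustrates how two consecutive daily declines compound; it is not meant to reproduce the 15% post-earnings figure.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (hypothetical helper)."""
    return (new - old) / old * 100


# Full-year capex guidance in $ billions, as quoted above
capex_sept, capex_now = 35.0, 50.0
capex_growth = pct_change(capex_sept, capex_now)

# Consecutive daily declines compound rather than add:
# a 10% drop followed by a 5% drop leaves 0.90 * 0.95 of the starting price.
two_day_drop = (1 - 0.90 * 0.95) * 100

print(f"Capex guidance increase: {capex_growth:.1f}%")   # 42.9%
print(f"Combined two-day decline: {two_day_drop:.1f}%")  # 14.5%
```

The capex check confirms the “43%” figure after rounding; the compounding line shows why a 10% drop plus a 5% drop totals slightly less than 15%.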

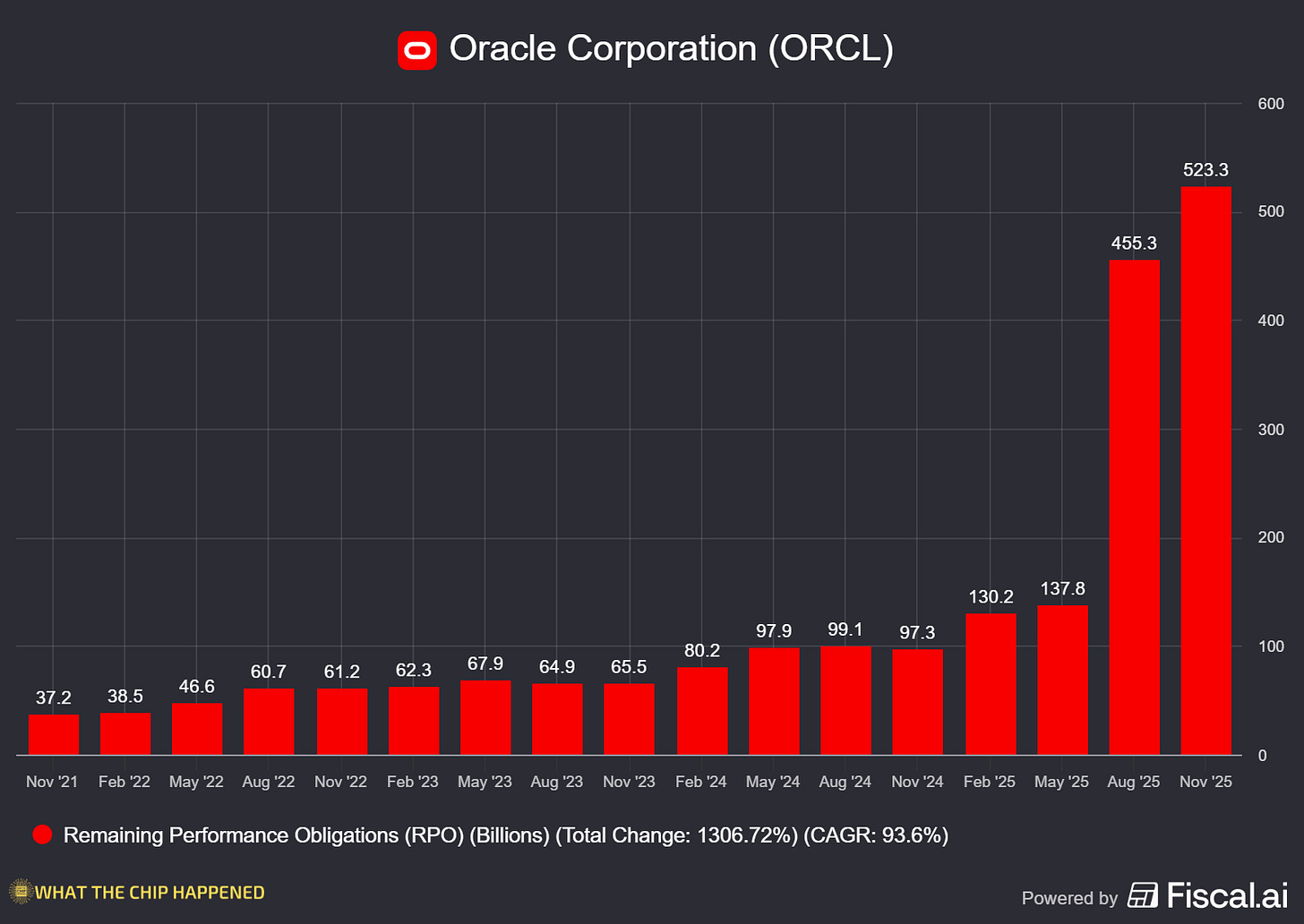

Why AI/Semiconductor Investors Should Care: Oracle’s stumble exposes the inconvenient truth of the AI buildout: chips aren’t the constraint anymore; construction crews, electrical transformers, and cooling systems are. The company’s $523 billion backlog (up 438% YoY) proves demand is real, but execution risk is mounting. Oracle is borrowing tens of billions to build infrastructure for an unprofitable customer in a market where power, labor, and materials are all bottlenecks.

Bulls will point to the backlog and the Meta and Nvidia commitments. Bears will point to the debt load and delivery delays. Watch infrastructure capex trends across hyperscalers: if Oracle’s problems are isolated, the stock is oversold; if they’re systemic, the entire AI infrastructure thesis needs repricing.

Broadcom Inc. (NASDAQ: AVGO)

🚀 Broadcom’s AI Revenue Doubles as $73B Backlog Signals Insatiable Demand

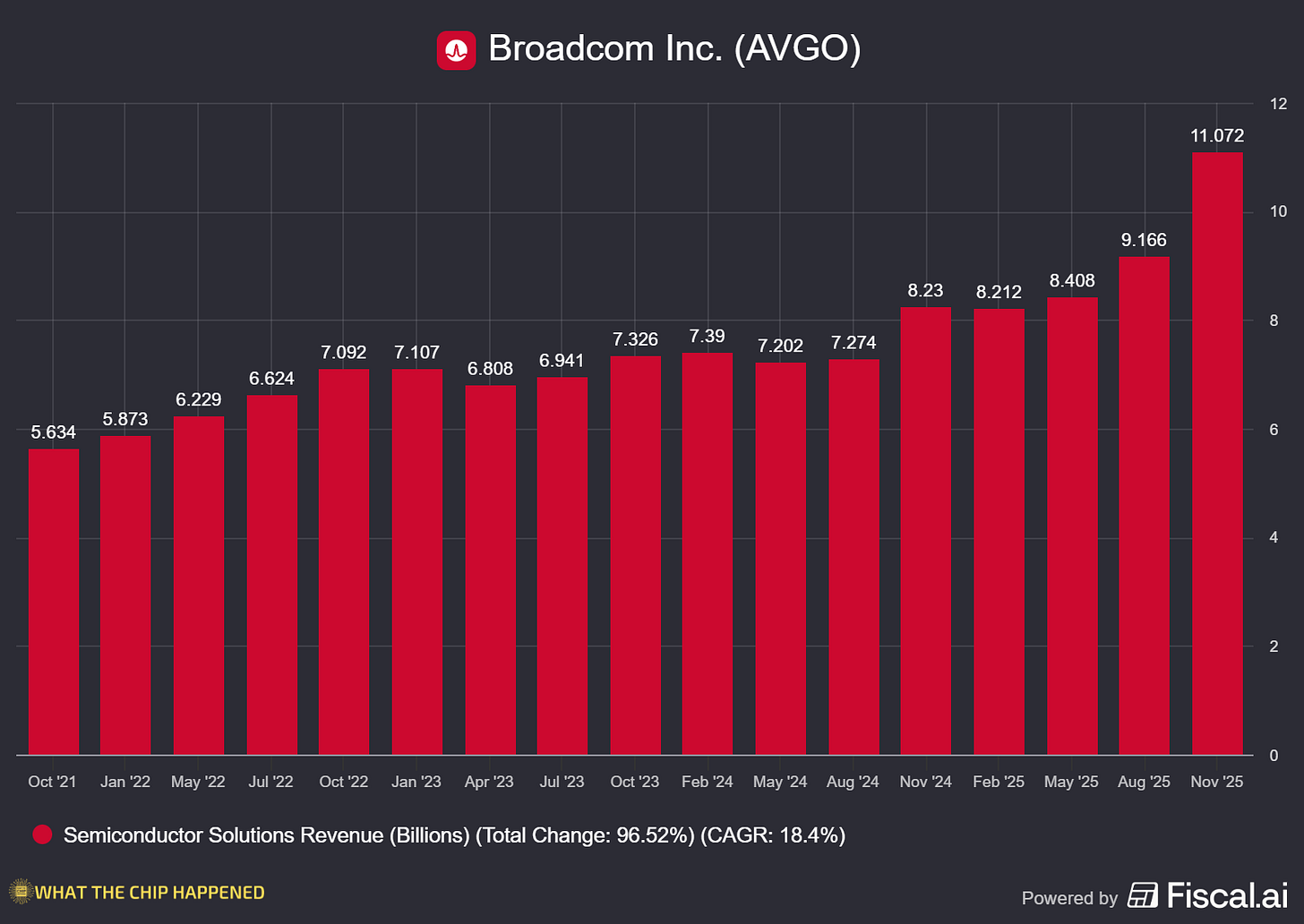

What The Chip: While Oracle struggles to build, Broadcom prints money designing what goes inside. The company crushed Q4 with AI semiconductor revenue surging 74% YoY to $6.5 billion, capping a fiscal year in which AI revenue hit $20 billion, a 10x increase over just 11 quarters. The real story is acceleration: Q1 guidance calls for AI revenue to double year-over-year to $8.2 billion, with CEO Hock Tan confirming this pace will continue throughout FY26. A record $73 billion AI backlog stretching 18 months out suggests hyperscaler demand is not slowing.

Details:

⚡ AI Revenue Acceleration: Q1 FY26 AI semiconductor guidance of $8.2B represents 100% YoY growth, up from initial 60-70% FY26 expectations just six months ago. Management buried the lede—they’re not slowing down, they’re speeding up.

💰 $73B Backlog Monster: Broadcom’s $73B AI backlog, slated to ship over the next 18 months, makes up roughly 45% of its $162B total consolidated backlog. Tan emphasized that the backlog “keeps growing,” meaning the $73B is a floor, not a ceiling.

🤖 XPU Customer Expansion: Broadcom now serves five custom AI chip customers. The fourth customer, which Tan identified as Anthropic, placed a $10B order in Q3 followed by another $11B in Q4. A fifth customer added a $1B order. Broadcom is becoming the go-to custom silicon design house for companies building alternatives to Nvidia.

🔥 Tomahawk 6 Demand: The 102 Tbps switch is seeing “bookings of a nature we have never seen,” per Tan. It’s the fastest deployment ramp for any switch product in company history—a sign that networking, not just compute, is the new gold rush.

🛡️ VMware Cash Machine: Infrastructure software delivered $6.9B revenue (beating $6.7B guidance) at a 78% operating margin, up from 72% YoY. The VMware integration is complete, and the business is now a high-margin cash generator, providing a cushion for AI semiconductor investments.

📈 Free Cash Flow Dominance: FY25 free cash flow hit $26.9B (42% of revenue), up 39% YoY. Broadcom returned $17.5B to shareholders via dividends and buybacks while funding $11B in R&D—this is what capital efficiency looks like.

💡 Dividend Growth: Broadcom announced its 15th consecutive annual dividend increase, raising the quarterly payout 10% to $0.65/share ($2.60 annualized). Even as it invests billions in AI, Broadcom keeps rewarding shareholders, a signal of confidence in sustainable cash generation.

⚠️ Margin Compression Coming: Gross margin is expected to compress ~100 bps in Q1 as AI mix rises, with more pressure likely once systems shipments begin in H2. This is the main headwind to the bull case: more revenue from lower-margin products means profitability metrics will lag revenue growth.

🌍 Supply Response: Broadcom is building advanced packaging capacity in Singapore to address multi-chip custom accelerator demand.

🔵 Non-AI Stabilization: Non-AI semiconductor revenue is guided flat YoY at ~$4.1B. Broadband is recovering, but enterprise spending remains muted as “AI is sucking the oxygen” from other budgets. AI is set to make up roughly two-thirds of Q1 semiconductor revenue, and that concentration is both a strength and a risk.
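A quick sketch cross-checking the Broadcom ratios above. Inputs are the figures quoted in this section; the derived values (implied FY25 revenue, prior quarterly dividend) are our own back-of-envelope estimates, and treating Q1 semiconductor revenue as AI plus non-AI guidance is an assumption.

```python
# Figures quoted in the Broadcom section ($ billions unless noted)
ai_backlog = 73.0
total_backlog = 162.0
q1_ai_guide = 8.2
q1_non_ai_guide = 4.1
fy25_fcf = 26.9
fcf_margin = 0.42          # FCF as a share of revenue (rounded in the source)
quarterly_dividend = 0.65  # $ per share, after the 10% raise

ai_share_of_backlog = ai_backlog / total_backlog

# Assumption: Q1 semiconductor revenue = AI guidance + non-AI guidance
ai_share_of_semis = q1_ai_guide / (q1_ai_guide + q1_non_ai_guide)

# Back out revenue from FCF and FCF margin (approximate, margin is rounded)
implied_fy25_revenue = fy25_fcf / fcf_margin

annual_dividend = quarterly_dividend * 4
prior_quarterly_dividend = quarterly_dividend / 1.10  # undo the 10% raise

print(f"AI share of total backlog: {ai_share_of_backlog:.0%}")           # 45%
print(f"AI share of Q1 semis guidance: {ai_share_of_semis:.0%}")         # 67%
print(f"Implied FY25 revenue: ${implied_fy25_revenue:.1f}B")             # $64.0B
print(f"Annualized dividend: ${annual_dividend:.2f}")                    # $2.60
print(f"Implied prior quarterly dividend: ${prior_quarterly_dividend:.2f}")  # $0.59
```

Because the 42% FCF margin is itself rounded, the implied revenue figure is only approximate.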

Why AI/Semiconductor Investors Should Care: Broadcom is the ultimate picks-and-shovels winner of the AI buildout, with diversified exposure across custom XPUs (Google TPU, Meta, ByteDance, Anthropic), networking (Tomahawk, Memory Link, optical), and high-margin VMware software. The $73B backlog provides visibility most semis can only dream of, and management’s confidence in accelerating growth through FY26 suggests hyperscaler capex isn’t peaking—it’s compounding.

The bear case is customer concentration (3-5 hyperscalers drive everything) and margin compression from systems revenue. But here’s the reality: Oracle is struggling to build the buildings; Broadcom is selling the brains that go inside them. One business model is asset-heavy and execution-risky. The other is design-focused and capital-efficient. Watch backlog trajectory and Tomahawk 6 shipment timing—this is the cleanest AI infrastructure play in the market right now.

Nvidia Corporation (NASDAQ: NVDA)

🇨🇳 Trump Greenlights Nvidia H200 China Sales—But Beijing May Say No Thanks

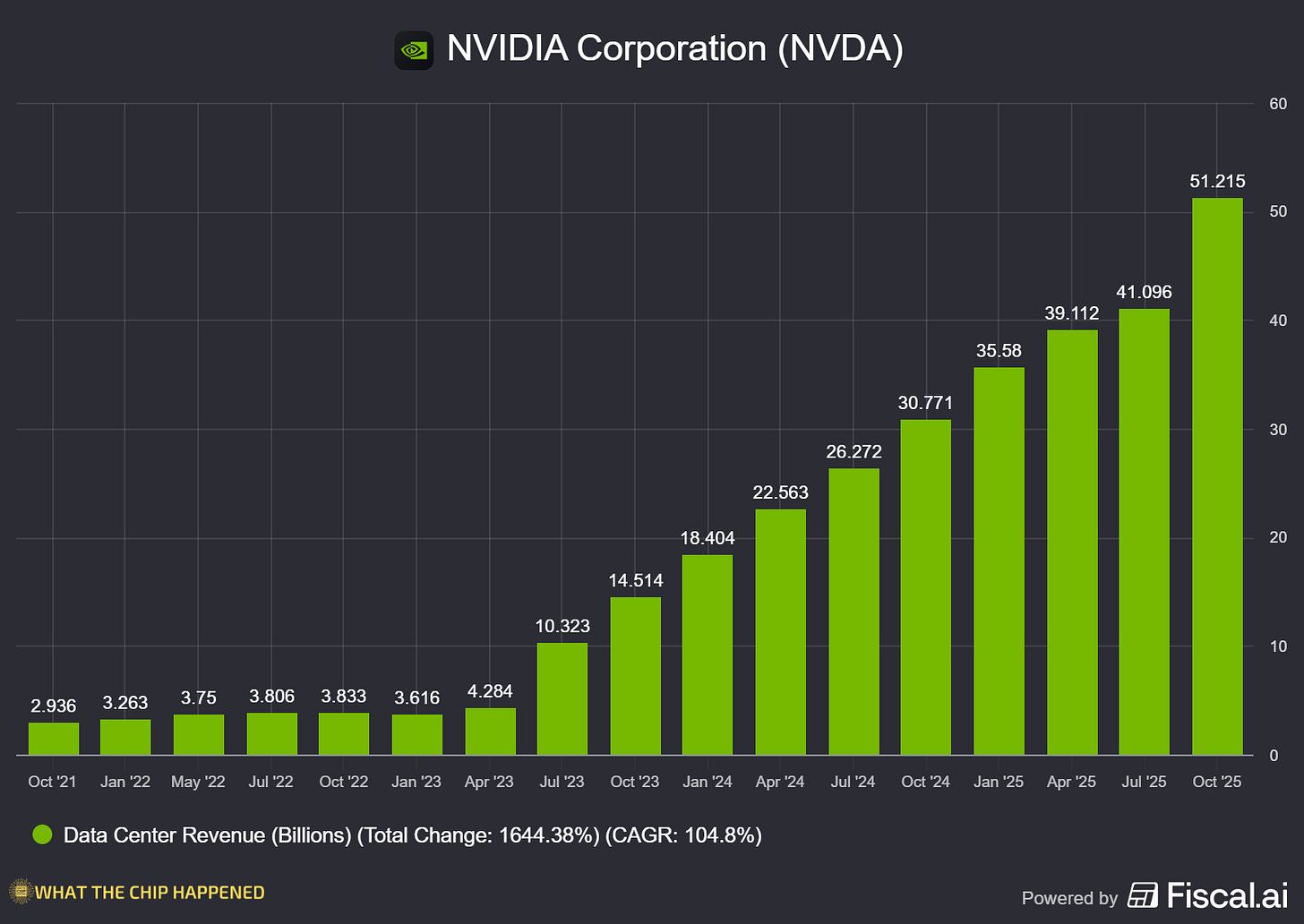

What The Chip: President Trump handed Nvidia a gift on December 8: permission to sell H200 AI chips to “approved customers” in China, with 25% of sales paid to the U.S. government. The H200 is roughly six times more capable than the H20 chips previously available to Chinese buyers, and CEO Jensen Huang has pegged the China AI chip market at $50 billion this year, with Bloomberg estimating H200 annual revenue at $10 billion. But there’s a problem: White House AI czar David Sacks says China has “figured out the U.S. strategy” and is rejecting the chip in favor of domestic alternatives.

Details:

🔄 Deal Structure: H200 chips manufactured by TSMC in Taiwan would be imported into the U.S. for security reviews, taxed at 25%, then re-exported to approved Chinese buyers. This convoluted routing adds cost, complexity, and delays—not exactly competitive with domestic options.

⚡ Performance Gap: The H200 features HBM3e memory with 4.8 TB/s bandwidth (up from the H100’s 3.35 TB/s) and 141 GB of capacity versus 80 GB. It’s Nvidia’s third-most powerful processor (behind Blackwell Ultra and Blackwell) and roughly six times more capable than the H20, a genuine technological leap.

🎯 Strategic Rationale: U.S. officials told The Information this was a middle ground: Blackwell remains banned, but a complete H200 ban would accelerate China’s domestic chip development. The logic: better to let Chinese buyers purchase restricted, previous-generation hardware than force them into self-sufficiency.

🚩 China’s Cold Shoulder: Beijing convened emergency meetings with its largest tech companies to assess demand. Proposals include capping Nvidia purchases relative to domestic accelerator buys, or barring H200 use in strategic sectors like finance and energy. Translation: we’ll grudgingly allow minimal imports, not embrace them.

💰 $70B Self-Sufficiency Push: China is weighing incentives worth as much as $70 billion to support local chipmaking. That’s more than Nvidia’s entire quarterly revenue—Beijing is dead serious about chip independence.

🔌 CUDA Advantage: Unlike most Chinese accelerators, the H200 supports Nvidia’s CUDA software ecosystem, simplifying model porting and cluster integration. This is Nvidia’s moat—even if Chinese chips match performance, CUDA lock-in is real.

⚠️ Smuggling Precedent: A recent case revealed individuals exported or attempted to export at least $160 million worth of H100 and H200 GPUs between October 2024 and May 2025. The gray market exists because demand exists—but it also proves enforcement is porous.

📉 Muted Market Reaction: Nvidia shares climbed on initial news, then pared gains and rose only ~2% after hours. The market isn’t pricing in a China windfall—it’s pricing in uncertainty.

🔮 Analyst Skepticism: Swissquote Bank notes the approval may have a limited impact unless Nvidia can export other chip lines like Blackwell or Rubin. One product line approval doesn’t reopen the Chinese market—it just creates a narrow, heavily monitored channel.
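The spec comparison and the revenue split can be sketched the same way. The split below is hypothetical: it assumes the 25% U.S. government share applies to the full $10 billion Bloomberg estimate, which in practice depends on how many H200s approved Chinese buyers actually order.

```python
# Spec figures quoted in the Nvidia section
h200_bandwidth_tbs, h100_bandwidth_tbs = 4.8, 3.35
h200_memory_gb, h100_memory_gb = 141, 80

bandwidth_gain = h200_bandwidth_tbs / h100_bandwidth_tbs - 1
capacity_gain = h200_memory_gb / h100_memory_gb - 1

# Hypothetical: 25% U.S. government share applied to the full
# Bloomberg estimate of $10B in annual H200 revenue.
est_h200_revenue_bn = 10.0
us_gov_share = 0.25
us_gov_take_bn = est_h200_revenue_bn * us_gov_share
nvidia_net_bn = est_h200_revenue_bn - us_gov_take_bn

print(f"Bandwidth vs H100: +{bandwidth_gain:.0%}")       # +43%
print(f"Memory capacity vs H100: +{capacity_gain:.0%}")  # +76%
print(f"U.S. government share: ${us_gov_take_bn:.1f}B")  # $2.5B
print(f"Remaining to Nvidia: ${nvidia_net_bn:.1f}B")     # $7.5B
```

Even under that generous assumption, the government share takes a quarter off the top before any of the routing and review costs described above.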

Why AI/Semiconductor Investors Should Care: Trump’s H200 approval is optionality, not certainty. Beijing’s resistance signals a strategic shift: China would rather accept near-term AI performance disadvantages than remain dependent on U.S. silicon. For Nvidia, that means the Chinese market, historically a major revenue driver, could stay functionally closed for the foreseeable future.

The bull case hinges on Chinese tech giants ultimately being unable to resist H200 performance for training workloads, especially given CUDA ecosystem advantages. The bear case is that Beijing doubles down on Huawei and Cambricon, permanently shutting out Nvidia while subsidizing domestic alternatives. Watch whether any major Chinese AI labs (ByteDance, Alibaba, Tencent) publicly commit to H200 orders; if they stay silent, the market is effectively closed. Until that happens, Nvidia’s growth story rests almost entirely on U.S., European, and allied demand.

YouTube Channel - Jose Najarro Stocks

X Account - @_Josenajarro

Disclaimer: This article is intended for educational and informational purposes only and should not be construed as investment advice. Always conduct your own research and consult with a qualified financial advisor before making any investment decisions.